Further observations about physics – split files

(01) Introductory remarks

To start, I will make the following set of assumptions. The term "big bang" conjures up the impression that the universe came into being at a particular point in a(n eternal?) continuum of forward-time (firmly restricted to the 13.7 bn Yr ago to AD 2030 time-direction) and it exploded into some pre-existing spatial entity. This gives legitimacy to the multiverse idea where, somewhere over there and out of sight, countless other universes can exist – restricted to the same time direction. But space and time may represent an emergence that is strictly "ex nihilo" (out of nothing) from a zero-dimensional-zero-sized-zero-point-source that is outside of any process that could be described as time or space. The only thing that seems to be pre-existently "something" is the uncertainty about the exact nature of "nothing" – so that jitteriness emerges, differentially persists and accretes. Out of this uncertainty a full universe emerges whose net energy (probability distribution around the mean) is zero. For every positive probability a balancing negative probability exists that leaves its energy balance (concentrated improbability distribution) at precisely zero. Everything is "centred" around a virtual singularity of zero size, zero mass, zero time and zero momentum (the virtual implies that zero is also virtual – rather like Quad electrostatic loudspeakers that act as virtual point sources – see page 10 of the brochure).

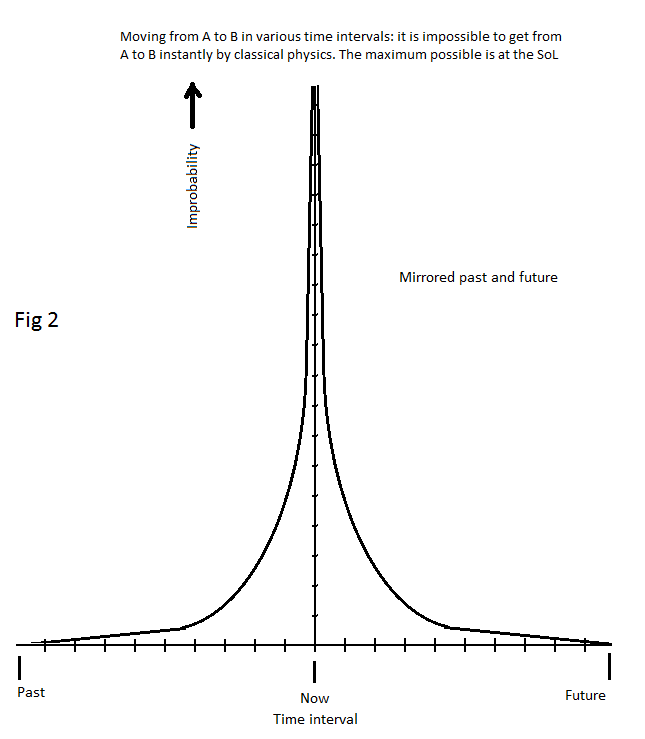

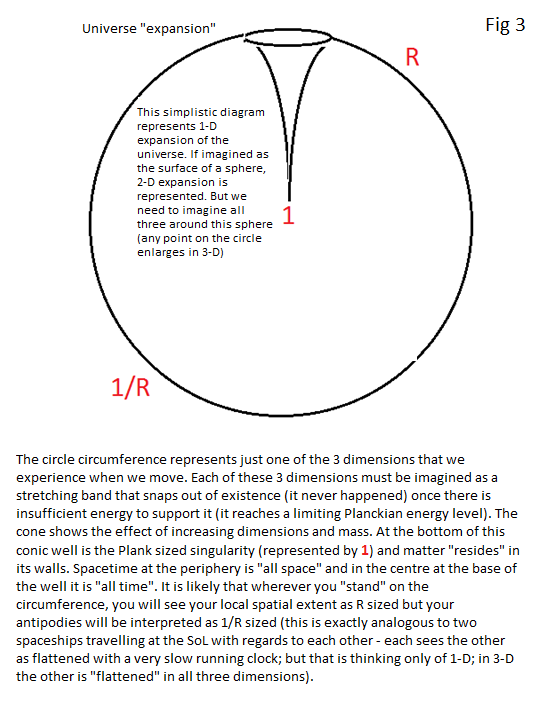

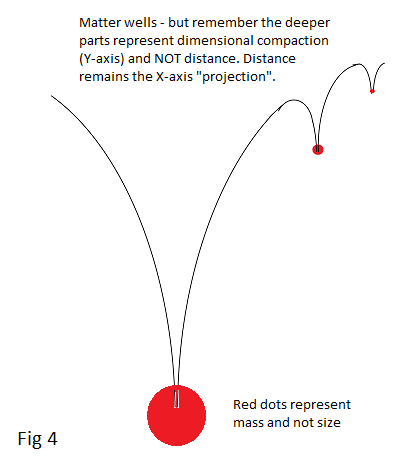

First: time and distance combine together to dictate how much energy is needed to move from one inertial frame to another (see New Scientist's "One-Minute Physics" videos and select "How far away is tomorrow?"). So to move an object from [point A now] to [point B in the future] the following observations can be made. (To give an image to this idea, imagine the object is a keratin fragment that has just fallen from the skin of your arm.) A slow leisurely move from A to B will require low force and low acceleration. A faster move from A to B needs greater force and acceleration. To get from A to B almost as fast as a photon would require enormous force and acceleration. To get from A to B instantly is impossible in classical physics and if special relativity "rules" are strictly adhered to. So, the "effort distance" of getting from A to B, slowly, is much less than the "effort distance" of getting from A to B, instantly. The difficulty in getting from A to B increases with the shortening in the time interval allowed to achieve the move. Effectively, from here to there instantly is massively (pun intended) more difficult than getting there in an hour. (Even the keratin fragment would become infinitely massive if it were made to move there instantly.) So what about getting from A to B in minus one picosecond? Does that have any real meaning? It ought to mean travelling to 1 picosecond ago (that is 1 picosecond in the past). So what might the graph of all those negative seconds look like? (They have happened, so they should have some representation.) Two things can be said about this. 
First, moving a highly ordered macrostructure (like, for example, your brain) to a point 1 picosecond ago is going to be far, far, far more improbable than the same brain assembling itself – from its component particles – by sheer chance (reminiscent of the argument that says that a Jumbo-jet cannot "spontaneously fall together" from a collection of its component parts – it is vanishingly improbable). Could the graph on the negative-passage-of-time side look very similar to that on our positive-passage-of-time side? This should be, effectively, a reflection. To get from A to B instantly would require a virtually infinite amount of acceleration (then deceleration – note it is a cycle!!). It would not matter if the distance from A to B is just one millimetre. To get there less-than-instantly (say in minus one picosecond and in other words one picosecond ago – and this must be a real entity because it has just happened) would require "more" than an infinite amount of energy under the rules of classical physics – suggesting that it is "classically-impossible-physics" for even a keratin scale to move backwards into the past. But Planckian time differences, Planckian distances and the resultant increasing probability of quantum "effects" might change this barrier (e.g., quantum jumps, quantum tunnelling, quantum uncertainty). But at this acceleration-deceleration cycle our moving object would also become apparently minute, apparently supermassive and its "clocks" would, apparently, run exceedingly slowly (and even backwards, of course: this was the conjecture). So, the effort-distance travelled is traded from being something dominantly measured in metres to something dominated by mass – becoming supermassive and having a slow (or backward once "beyond" the SoL) running "clock". And yet it could still remain a combination of only one femtosecond and one femtometre away. 
So anything moving forward leisurely in time can quite easily look like increasingly big metric distances and anything moving backwards in time can look like smaller and smaller accumulations and concentrations of mass with a decreasingly slow running (or reversing) "clock". But, fundamentally, one femtosecond ago is still, potentially, just millimetres away even though it might be separated by an immense improbability barrier. As we go to shorter and shorter distances, a move from A to B instantly still remains classically "impossible". Once we get to Planckian time and distances, the quantum world can take over. We end up with an ultra-thin membrane separating now from the future and from the past and quantum leakage might occur – perhaps in a capacitative way across this "membrane" (so, real particles might capacitatively exchange their real for virtual characteristics across the membrane between past and future). And, ultimately, by analogy with refraction, any approach to the membrane that is not in a normal (right-angled) approach could be reflected backwards in mirror image form. Perhaps this could be interpreted as the R to 1/R inversion of physical laws that the string theorists have highlighted (where the combination of high energy string vibrations in a large dimension can have the same energy as low energy vibrations in a small dimension. Later, I suggest re-thinking this to R/[statistical mean] and [statistical mean]/R). Here, the theorists see a transition from a vibrating string that is shorter than its dimensional constraints into a wrapped string that is longer than the dimensional constraints. This emphasises points about circular "motion". 
It can be confined to the circle itself; if the circle centre progresses along some extended dimensional element, it traces out what we then call a sine wave (this might be equated to a vibration); or, the circular/oscillatory form can be wrapped, spring like, around the surface/margin of a compact dimension. (See the diagrams below – they emphasise how each form can morph into the others). Now, this could equate to our "outside the electron shell world" that we experience as a 3-D environment (the R universe) within which the "reflected" 1/R world of sub nuclear matter establishes a stable standing wave juxtaposition of the 2-D encasement by electron shells. So, the "now" membrane between "future" and "past" would lie somewhere between the electron shell and somewhere within the atomic nucleus. Since the R world seems to be dominated by a 3-D universe, the physics of R and 1/R forms appear to be indistinguishable, we might expect an "inhabitant" of the 1/R world to perceive a mirrored 3-D universe; and that, in turn, leads to a conjecture that the nine dimensions conjured up in string theory might be the product of 3 sets of 3 dimensional matrices (equalling an apparent 9-D matrix). This could be strung out from the "opposing sides" of a past-present-future "brane". This "brane" would lie physically (as a distance) very close to all observers' "nows". But, as I interpret it, this implies that the past might just be a reflection of the future in a fashion corresponding to an R to 1/R juxtaposition. The analogy might be better restated as the "now"-point and this – though more or less continuous with adjacent "nows" – is "projected" back to the virtual singularity of both individual and collective "nows" (as in the sphere diagram below – the third down). This Planck sized (non-zero) singularity corresponds to the virtual centre of mass whether it be a particle, atom, planet, sun, galaxy or universe (and any intermediate "collection"). 
Multidimensional compactification at this point means that quantum jumps are a tiny fraction (in 9-D – or more) of 1-D quantum jumps. It is likely that these jumps, from a highly improbable to a more probable distribution, are what we equate to time. At the singularity there are so many 9-D jumps to the 1-D jump that it takes "forever" to achieve it (c. 13.7 bnYrs). This is reflected in the Universe "expansion" diagram below. "Now" will turn out to be a poor analogy – a fulcral point of maximum compactability is a better metaphor.
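
The earlier claim that the "effort" rises steeply as the time allowed for the A to B move shrinks can be put into rough numbers with textbook special relativity. The sketch below is only an illustration under stated assumptions: it ignores the acceleration and deceleration phases, treats the trip as made at constant speed, and uses a hypothetical 1 microgram mass for the keratin fragment. The function name `kinetic_energy_joules` is invented for this example.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact by SI definition)


def kinetic_energy_joules(mass_kg, distance_m, time_s):
    """Relativistic kinetic energy needed to cover distance_m in time_s at constant speed."""
    if time_s <= 0.0:
        raise ValueError("classically, the trip must take a positive time")
    beta = distance_m / (time_s * C)  # required speed as a fraction of c
    if not 0.0 <= beta < 1.0:
        raise ValueError("the trip would need a speed of c or more")
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    # (gamma - 1) rewritten as gamma^2 * beta^2 / (gamma + 1) so that it does
    # not round to zero at everyday speeds.
    return mass_kg * C * C * gamma * gamma * beta * beta / (gamma + 1.0)


# Assumed, illustrative values: a 1 microgram fragment moved 1 metre.
FRAGMENT_MASS_KG = 1e-9
for seconds in (3600.0, 1.0, 1e-6, 4e-9, 3.34e-9):
    energy = kinetic_energy_joules(FRAGMENT_MASS_KG, 1.0, seconds)
    print(f"{seconds:10.3e} s -> {energy:.3e} J")
```

Asking for the metre to be covered in less than about 3.34 nanoseconds raises an error, which is the code's version of the classical barrier described above; zero or negative travel times are rejected for the same reason.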

The important point to absorb about this "winding/vibrating" point is that there exists an R sized dimension with a low frequency vibration that is as energetic (statistically improbable) as a 1/R dimension with a high frequency vibration and could be described as a "reflection".

Now we can get a glimpse of what galaxy, solar and planetary orbitals might be about. To remain close to the "now" point, in opposition to the jittery world of photons, which are so light that they want to flee off at the slightest "nudge" and at (what we perceive as) the speed of light into the future (think of the metaphor of Brownian motions), our planetary systems seem to want to stay closely attached to what we see as the past (but it is this "now" point). The centre of the mass that constitutes a human body is (for the vast majority) less than a metre away from the immediate past. We already know it is possible to move into the future (arrive at your 100 year old son's funeral for example). To do so we would have to undergo prolonged acceleration and this is the so-called "twin paradox" for spaceship travellers. But an enormous amount of energy (injection of improbability) is needed to achieve this. The barrier to the past, however, is (classically) insurmountable.
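
The "twin paradox" remark can be illustrated with the standard time dilation formula. This is a minimal sketch, assuming an idealised trip at constant speed and ignoring the acceleration and turnaround phases; the function name `traveller_years` and the chosen speeds are hypothetical.

```python
import math


def traveller_years(earth_years, beta):
    """Time that elapses for a traveller moving at beta (fraction of c) while earth_years pass at home."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be at least 0 and below 1")
    return earth_years * math.sqrt(1.0 - beta * beta)


# How much the traveller ages while 100 years pass on Earth, for a few assumed speeds.
for beta in (0.6, 0.9, 0.99, 0.999):
    print(f"beta = {beta}: traveller ages {traveller_years(100.0, beta):.2f} years")
```

The closer the speed gets to that of light, the fewer years the traveller experiences, which is the sense in which prolonged acceleration carries a person "into the future".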

The view from within a nucleus of one of our constituent atoms (at the Planckian centre of its mass) may well seem as though they are much closer (than we perceive) to adjacent nuclei, to the centre of the earth, to the centre of the sun, to the centre of our galaxy and its black hole event horizon, and even to its "virtual singularity". It is only their centrifugal tendency that stops them from completing their collapse to a singularity. This is just a more metaphorical way of stating what Einstein introduced us to with warped spacetime. So, mass (multi-D) wants to disperse towards the past and condense towards the future. Light (1-D) wants to disperse towards (flee into) the future and to condense towards the past (imagine a big bang video seen in reverse as it accretes together and implodes to nothing). But the two might be reflected "back and forth" (in a static rather than oscillatory fashion) between the two virtual sides of a "virtual singularity" that are, in actuality, both extremely close to "now". And that "now" is relative to each and every A to B movement (remember that wherever there is a temperature, atoms do not stay at rest). To us, though, matter "appears" to be unequivocally condensing, and light unequivocally expanding into the universe's deepest corners.

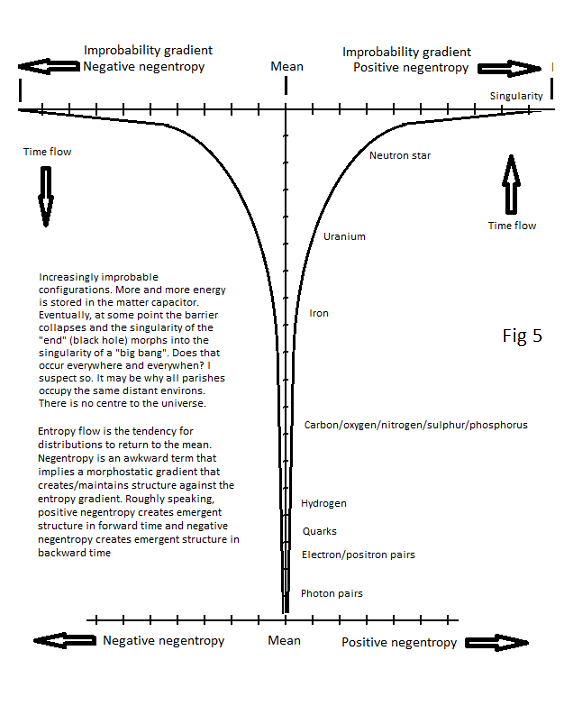

If we accept the earlier idea that the quantal objects that make up our universe are scattered around the mean and that the event horizon of a black hole is relatively close to the event that we call the big bang (effectively, a relatively short "effort distance" from it), then we can surmise that the negative time (the backward in time bit – the minus one picosecond all the way back to the beginning which is the "big bang") also has a similar asymptote. Now this double asymptote is looking pretty familiar and rather similar to a statistical distribution about the most improbable. If we think of the situation that exists in deep intergalactic space, then the probability of noticing a forward or backward in time event is, there, more likely than it is in our parish where we occupy an incredibly improbable state of negentropy (I will call this side of going forward in time "positive-negentropy" and going backward in time "negative-negentropy"). The higher up the asymptote we get, the more improbable (harder to get to by sheer chance) our condition is. However, this position that we humans occupy is quite likely to be one of very high order rather than just being highly (spontaneously) improbable. And the one at the bottom of the scale is one of very low order (bland sameness) that is also highly (spontaneously) probable (a very high entropy state). This puts carbon based life forms and the emergence of a technologically advanced society very high up the "functionally-ordered" scale. (Note that high entropy and low order – and vice versa – are not obligate "bedfellows": I have already pointed out earlier, in the entropy section, that "a highly ordered" and "high entropy state" can co-exist.)

The two asymptotes in this graph should never actually (classically) meet. They will get to a Planckian physical distance apart and at these scales we should be able to see backward in time events occurring as quantum fluctuations or jumps (and we do see them!!). But the overwhelming mass action effect of "positive-negentropy" will ensure that we never get to see much evidence of these events at the macroscopic level. Exceptions to this general invisibility probably include the observed outcomes of double-slit experiments. Note that we already know that light cannot go faster (from our perspective) than the speed of light BUT it can instantly reflect at the speed of light without "flinching" – this could be very relevant.

I guess that, if it exists, the "join" between the future and the past when encountered at the mean in deep intergalactic space is mostly occurring at a very "bland-sameness" level. So, a question: towards the extremes of improbability and order, is a technologically advanced society, together with all its technological trappings, more functionally-ordered than a neutron star? And is it more functionally-ordered than a "sea" of electromagnetic waves "charging around at the speed of light"? And what about the conundrum of "the big bang" – that was simultaneously very bland (near uniform) yet unbelievably improbable; viewed afresh from the above perspective, this might not look quite such a contradiction.

The implication of this double-asymptote diagram is that it is a shorter "effort distance" to move into the future, down into a black hole then back through "the mean" and then into the past so that we might arrive at a point 1 picosecond ago. For macroscopic structures, going straight across the double-asymptote-barrier could be a similar if not higher energy (improbability) barrier. (Note that we humans like to think of the possibility of time travel whilst maintaining our highly improbable configuration – i.e., a structurally-intact-thinking-barely-aged-human-being.) And it is extremely unlikely that we could ever enter "negative negentropy" whilst retaining that formed state (we would be long dead, decayed and dispersed to a particle or even electro-magnetic wave mish-mash). In fact, it looks like we would just be reliving our historical emergence from star dust, to amoebae, to fish, to quadrupeds and finally to humans and our own parents getting together to create us.

So what might represent this barrier – this 2-D membrane – between the future and the past? It is tempting to see this, at least on one side, as the combined 2-D spheres of the electron shells – particularly the inner electron shell. Each electron shell represents a higher and higher barrier to "penetration". The inner two-electron shell (the hydrogen atom like shell) is the ultimate free electron barrier that separates the past from the future. That leads on to a thought that time might be the reciprocal (the 1/R equivalent) of what we regard as distance (the R equivalent) that we perceive as part of the extra-electron-shell world and it is the "distance" that we feel at home with (rather than "time" as the distance). Within that shell – inside the nucleus in particular – dimensions are closed down (or wound up) and time slows and there are hints that entropy can appear to be reversed with initial accretion in the future and dispersion in the past. So, across the entropic mean, positive neg-entropy is characterised by the R "universe", by "effort-distance" (space-time) that is predominantly "felt" as metres and it is dominated by negative charges on the outside of atoms; on the other side of the mean, the negative neg-entropy side, "effort-distance" (space-time) is dominantly appreciated (by us) as time, it is characterised by the 1/R universe, it is intra-nuclear (within the atomic nucleus and probably "beyond" its virtual "centre of mass" singularity) and it is dominated by positive charges. But these positive charges are possible virtual loans from the other side of the "now" point that, if they had not crossed the "now" barrier, would be occupants of a positive charge on the outside universe.

Now the double-asymptote-barrier mentioned earlier conjures up the metaphor of the previously mentioned six sided dice. Seen from one side only, there is never a zero dice throw (only -3 -2 -1 or +1 +2 +3 where opposite faces always but always add up to zero). Electromagnetic waves (or string structures), the metaphorical dice equivalent, should, therefore, always come as matched "pairs" of equal but opposite magnitude that really do sum up to absolute zilch. It is tempting to ask if that might be why we find it hard to find magnetic monopoles. Space itself might be the construct of multiple pulses of circular magnetic fluxes – multiple rings/spheres of "space" that "overlap and coalesce". Everything is cyclical (wave like) – including the ultimate and largest cycle that joins the "beginning" (big bang) to the "end" (black hole). I am tempted to think of an electron as a unit composed of an R plus 1/R pairing. This pairing is the backdrop on which the standing wave of electron matter is composed. The R element is a supermassive disk of "positive" electromagnetism dispersed through space (so we don't appreciate its "positivity") and the 1/R element is a minuscule disk of "negative" electromagnetism that has "leaked" to the other side. The electron negative disk can wrap around a tiny positive (nuclear charge) but this sphere size dictates its "string" wavelength that corresponds to the "reflection" in an anti-matter world of our "past" (see below) of the R radius of an antimatter magnetic field. This helps to make it clear why magnetic monopoles are hard to find – we would need to look for them at an R sized object. Note that all this suggests that the very fabric of METRIC space is created by the R representative of this (opposite disk face) pair. They cannot interact to slide back (as electromagnetic waves) to nihil/zilch UNLESS we can expose an electron to a positron (for example). 
Then the two 1/R components are positively and negatively charged and the two R components are similarly positively and negatively charged. They can then "collapse" instantly back to the mean – but seem to do this – from our perspective – the "long way around".

In a quantum world, extra dimensions "deeper" than 1-D might be expected to never fall to zero but to some (virtual?) minuscule but finite Planck sized distance. So for entities largely confined to a 1-D "existence", distances may not be completely devoid of some trading off of their dimension for time. There could be a tiny trade off and this might contribute to the observed limit to the speed of light. Time is – in the limit and once unleashed from the multidimensional cages of atoms – returned pretty much to just a distance (and, of course, vice-versa!!). The energy that becomes tied up in matter may be the consequence of its "dive" and "condensation" into 2-D, 3-D, 4-D etc. dimensionality. This just might help contribute to the observation that light always seems to move away from higher dimensional conformations at the "speed of light" (3-D for us – we cannot measure the SoL without a 3-D instrument of some sort). But this velocity is only achieved in the absence of intervening "matter" – i.e., in a vacuum. It is already believed that matter is the product of standing waves and standing waves regularly form where two or more waves are travelling in opposing directions. Ultimately, if an electromagnetic wave is a loop filament (connected at its "birth" between the upper face and lower face of our metaphorical dice), then we need to conceptualise what happens when positive and negative energy interact, unravel multidimensional stable standing waves and allow the collapse of an "existential loop filament" (an annihilation?). So, from a photon's view, its companion photons can be across the universe in an instant. From our view, we are left looking at the consequence of the collapse of the existential loop and the loosened filament is now "somehow" affected by a return "journey" in reverse time back to its origin where it rings (oscillates) on arrival in the past (what we would consider to be the origin and an instance of "cause and effect"). 
So could it be that light always appears to arrive at the target at the speed of light because it represents the released end of the filament actually leaving the "target" in reversed time? There might be some value in developing and refining this metaphor. Maybe not, however. I will leave this to the reader.

This gives us a better feel for what might constitute forward in time entropy and backward in time entropy. Remember, time is probably just a distance but, unlike the time taken for a 1D light beam to get to the moon and back, distance that goes through many dimensions is characterised by an increasing trading off of simple distance (1D space) into (multi-D) spacetime – in which our (human) perception becomes largely focused on time. They are both just distances (or probably – and more exactly – effort-distances). So what we would consider to be forward in time is (effort) distance travelled in the direction "mapped out" from its origin in the multi-D "core" (denoted as 1/R in the diagram above) to the periphery of this expanding light (1-D radius) "sphere" (and denoted by R). So travelling "backward in time" is equivalent to moving progressively more deeply into multidimensionality (where gravity increasingly "dominates" our experience of it). Ultimately, returning to the "beginning" would occur at or within the event horizon encircling a (virtual?) singularity – a black hole. At this point the capacitor "dielectric" may break down and this would act rather like a water reservoir's overflow funnel.

It might be useful to think of a wave as a circular phenomenon; a pulsating flux of magnetism (an expanding then contracting ring of alternating N->S then S->N fluxes) that alternately expands then contracts into 2-D "space"; this then "pushes out" an alternating +ve then -ve charge that is in step but at right angles to the magnetic flux; then we need a "rail" ("strings" or "filaments" in space) so that the pulsating magnetic flux can work its way along the "rail", "away" from its creation-point (rather like those railway handcars, often seen in old western films, that are hand "pumped" along the rail). This "rail" should be at right angles to the other two (Maxwell). It might just represent the superimposition of our metaphorical handcar over a much larger electromagnetic fluctuation. Whatever, it is in this arena of electromagnetic oscillations that the condensation of persistent matter should begin to emerge out of the quantum foam that is a fundamental property of tiny distances.

The requirement for the theory of inflation is predicated by ourselves when we insist on a unidirectional straitjacket for time. If our baryonic universe is an emergent phenomenon, then there may be no need to dive closer to the "virtual" singularity than the event horizon. The forward and backward flows that create the standing waves of matter would "hinge" around the event horizon or to a virtual (but non zero) point – a virtual singularity. The passage of time at an event horizon is virtually at a standstill; so, if this was first formed early in the history of our universe (our straitjacket for time suggests 13-14 billion years ago), its time, from its own perspective, is very much closer to the "big bang" than we are. Could this mean that at least some Hawking radiation is released in our past, closer to the "big bang"? In extremis, if the black hole started to accrete at the "moment" of the "big bang", then Hawking radiation could be part of that radiation. In this view, the present and future could all be "going down the plughole" back to the beginning. But all this apparent "movement" may simply be a parochial illusion as we have to claw our daily lives up the "falling steps" dominated by electron shell repulsion (just to stay still); this is our most immediate and dominating encounter with the "entropy driven" repatriation back towards nihil/nothing. Photons do not experience any passage of time (effectively an electromagnetic wave is able to transfer an energy packet – a statistically improbable occurrence – across vast distances of space). Rather than time being "slowed" to a virtual standstill (as at the event horizon) photons have traded virtually none of their 1-D distances into creating a time interval (one metre is 3.3 nanoseconds and 3.3 nanoseconds is one metre; it is impossible, in 1-D and in a vacuum, to be greater or less than this; excepting when we drop to Planck sized distances, time and distance are synonymous). 
It is only when we try to measure the time it takes to move one packet of energy on a wave from one piece of baryonic measuring apparatus (a set of standing waves) to the next (set of standing waves) that we impose an apparent velocity on the process. To interact, the photon's energy packet has had to dive down into a multidimensional realm where some distance is traded for "time".
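
The "one metre is 3.3 nanoseconds" equivalence quoted above is simply distance divided by the vacuum speed of light. As a small worked check (the function names are invented for this illustration, and the Earth to Moon figure is the commonly quoted mean distance, taken here as an assumption):

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)


def light_travel_time_ns(distance_m):
    """Nanoseconds that light needs to cross distance_m in a vacuum."""
    return distance_m / C * 1e9


def light_distance_m(time_ns):
    """Metres that light covers in time_ns nanoseconds in a vacuum."""
    return time_ns * 1e-9 * C


MOON_DISTANCE_M = 3.844e8  # assumed mean Earth to Moon distance in metres

print(f"1 metre       = {light_travel_time_ns(1.0):.3f} ns")
print(f"3.3 ns        = {light_distance_m(3.3):.3f} m")
print(f"Moon and back = {2 * light_travel_time_ns(MOON_DISTANCE_M) / 1e9:.2f} s")
```

The two functions are inverses of each other, which is the arithmetic behind treating a time interval and a distance as interchangeable for light in a vacuum.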

Quantum jitteriness could represent a constant uncertainty of exactly where a wave "is" on a "string". This could become manifest, initially, as apparent 1-D "translocations" consequent on the occurrence of variously improbable quantum jumps.

(From our parish!!): at one extreme we have 1-D (occupying) light waves/photon complexes. These are so light that they form a perpetual "Brownian motion" like dance. The slightest disturbance will send them careering off at the SoL into the future.

At the other extreme we have concentrations of mass that, if projected back to a virtual singularity, occupy Planckian multi-dimensional volume and extremely small size. Unlike photons, which take virtually no effort to accelerate to light speed, such a singularity is "infinitely hard" to set in motion.

These contrasting properties echo fundamental aspects of the quantum world. With light, we can know how fast it is going but can't tie it down to any place. With a massive singularity, we can know where it appears to be but we cannot attribute it any speed.

The following point could prove to be what is – essentially – a tautology. When we play around with electrical circuits, if we want to store electrical energy, we employ capacitors. Now, the closer that we can get each plate of the capacitor together without touching or allowing the electron-excess/electron-deficit to cross the gap, the more energy we can store in that capacitor. There comes a point, though, where the potential difference between the plates grows so much that the capacitor's dielectric barrier (air, dielectric material) breaks down and the energy just dissipates into a flash of photons and heat. Now, the double asymptote pictured above, between "the past – representing the positive charge" (one "plate"), "now" (the dielectric) and "the future – representing the negative charge" (the second "plate"), should act just like a highly efficient capacitor that is capable of storing vast amounts of energy (what we recognise colloquially as E = mc²). What constitutes "the past" and "the future" will not quite be what we naively expect from our parochial experience of time. It will be "coloured" by positive-negentropy and negative-negentropy and will be, ultimately, closely linked to effort-distance. So the whole concept of time may need to be reinterpreted as a function of distance and quantum uncertainty. (NB: equate this capacitance to the energy available in a group of hydrogen atoms and with how hard it is to release it.)
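
The ordinary electrical behaviour that this capacitor analogy borrows from can be sketched with the textbook parallel-plate formulas: capacitance C = ε₀εᵣA/d, stored energy ½CV² at a fixed voltage, and breakdown once the field V/d exceeds the dielectric strength. The plate area, gap and voltage below are hypothetical, the air breakdown figure is only approximate, and the function names are invented for this example. It models a laboratory capacitor only and says nothing about the past/now/future analogy itself.

```python
EPSILON_0 = 8.8541878128e-12   # vacuum permittivity, F/m
AIR_BREAKDOWN_V_PER_M = 3.0e6  # approximate dielectric strength of dry air (assumed)


def capacitance_farads(area_m2, gap_m, relative_permittivity=1.0):
    """Ideal parallel-plate capacitance, ignoring edge effects."""
    return EPSILON_0 * relative_permittivity * area_m2 / gap_m


def stored_energy_joules(area_m2, gap_m, volts, relative_permittivity=1.0):
    """Energy held at a fixed voltage: one half of C times V squared."""
    return 0.5 * capacitance_farads(area_m2, gap_m, relative_permittivity) * volts ** 2


def breaks_down(gap_m, volts, strength_v_per_m=AIR_BREAKDOWN_V_PER_M):
    """True when the field between the plates exceeds the dielectric strength."""
    return volts / gap_m > strength_v_per_m


# Hypothetical plates of 0.01 square metres held at 1000 V while the gap is narrowed.
for gap in (1e-3, 5e-4, 2.5e-4):
    energy = stored_energy_joules(0.01, gap, 1000.0)
    print(f"gap {gap:.2e} m: {energy:.3e} J, breakdown: {breaks_down(gap, 1000.0)}")
```

Halving the gap doubles the stored energy at a given voltage, until the field crosses the breakdown threshold and the stored energy would be released.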

Now, we have a situation where highly improbable distributions of energy (itself a statistically highly improbable distribution) have "emerged". If the SoL limit is pretty much absolute then crossing the dielectric (now) becomes increasingly hard the closer we try to move from A to B instantly (or even just into the past – an even higher spontaneously improbable barrier). So, when it comes to the docking of fresh energy (photonic wave packets), the "capacitor plate" is likely to be a very fussy recipient. Might it be that, like entering earth's orbit from deep space, the approach conditions need to be (Goldilocks style) just right (and vice versa for leaving)? The speed of light limit that we are accustomed to may be more a property of the "capacitor plate" (the 2-D spherical electron shell) than the filament that carries the photonic wave packet. We already know that the orbital frequency of electrons dictates the frequency of photons released from this shell. Some property of the conversion of the 2-D rotational speed into the 1-D photonic wave packet speed must mean that, whichever electron shell ejects the photon, it is converted to the same 1-D photonic energy packet speed. Could the SoL limit be dictated by electron shells rather than – as we traditionally see it – an intrinsic property of "light itself" (that we tend to regard as a single entity but it is a conglomerate of different factors, for example carrier filament, wave packet, frequency, propagation speed, wavefunction, oscillating charges and magnetic fields)? Only finely tuned wave-packets may, then, be capable of contributing energy (higher improbabilities) into the grand matter-capacitor. This needs thinking through but may be important. I am not aware of any method of measuring the SoL that does not involve interaction with an electron shell.

This leads into a consideration of the top and obverse sides of our metaphorical dice. I have already implied that the positive and negative sides of negentropy that are distributed around the mean must be in perfect, absolute balance. There should be no transient borrowing here (that CAN occur when we look at just the top sides of the dice or vice versa). A positive deviation around the mean MUST be perfectly balanced with a negative one if the principle of generation "ex nihilo" is to remain strictly balanced. So what features constitute the positive and negative deviants that arise "ex nihilo"? One option appears to be two waves that can, theoretically, arise out of nothing provided they are shifted through 180 degrees relative to each other. Such waves can also annihilate completely. Another possibility is that the string theorists' R and 1/R universes represent a perfect balance about R/R (this should equate to 1 unit – probably the Planck length – nothing can be smaller – so like our 6 sided dice, zero values never occur). That puts the fulcrum clearly at the Planck distance and this would fit nicely with our atomic capacitor being close-ish (by our macro-standards) to this point. Furthermore, we can now conjecture that the progression from R to 1/R goes something like this (1-D to 9-D representing one dimensional to 9 dimensional):

R R1-D R2-D R3-D R4-D R5-D R6-D R7-D R8-D R9-D R/R (Planck scale) 1/R9-D 1/R8-D 1/R7-D 1/R6-D 1/R5-D 1/R4-D 1/R3-D 1/R2-D 1/R1-D 1/R

Nuclear matter will accumulate somewhere towards the centre of this sequence (at least partially on the 1/R side) and the transition – through or towards the R9-D to 1/R9-D transition – will occur somewhere apparently within the depths of the nucleus of an atom. And, if you were an inhabitant of the 1/R world, you would probably regard yourselves as the R version and us as the 1/R version. The cone that subtends back to the beginning (the "big bang origin" in the above diagram) would have the R dimension on the outside of this light sphere, with this sequence running down towards the origin of the light sphere. Quite what happens once we are down to R9-D is not obvious from the current conjecture and needs consideration. I will guess that the R9-D to 1/R9-D transition requires the severest of improbabilities and equates to a black hole/big bang "virtual singularity", whilst the matter that constitutes the environment of mother Earth does not dive so deep that the "storage capacitor" "breaks down" to allow the ultimate total release, back into photons, of the stored energy. (Note that to confine an electron within a nucleus would require a phenomenal 3.77 GeV; CERN can reach into the TeV range and still – apparently – be short of creating a black hole.)
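
Going back to the first option mentioned before the sequence (two waves shifted through 180 degrees relative to each other), the balance can be checked numerically. What follows is only a minimal sketch under stated assumptions: the function names `wave` and `summed_pair`, the unit amplitude and the 1 Hz frequency are all hypothetical choices made for illustration and are not part of the conjecture itself. It simply shows that an ordinary sine wave and its copy shifted by pi radians sum to zero (to within floating-point rounding) at every sampled instant.

```python
import math


def wave(amplitude, frequency, t, phase=0.0):
    """Value at time t of a sine wave (frequency in Hz, phase in radians)."""
    return amplitude * math.sin(2 * math.pi * frequency * t + phase)


def summed_pair(amplitude, frequency, t):
    """Sum of a wave and its copy shifted through 180 degrees (pi radians)."""
    original = wave(amplitude, frequency, t)
    shifted = wave(amplitude, frequency, t, phase=math.pi)
    return original + shifted


# Assumption for illustration only: unit amplitude, 1 Hz,
# sampled at 100 points across one full cycle.
samples = [summed_pair(1.0, 1.0, i / 100) for i in range(100)]

# The largest leftover value is rounding error, not a physical residue.
largest_residual = max(abs(s) for s in samples)
print(largest_residual < 1e-12)
```

Each wave on its own is far from zero for most of the cycle; it is only the pair, taken together, that nets out to nothing.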

Ultimately, in a grand explanation of what is happening, I suspect that we will have to get rid of the millstone idea that time is "real". By that, I mean the idea that the past is gone and ceases to exist and the future is equally non-existent until it has happened. In this scenario, the only real "events" are so transient that they are long gone within femtoseconds. Now, Einsteinian physics already suggests this is a parochial view because different observers observe different "nows" and these "nows" clearly remain interdependent. What the "giant capacitor" idea does is to give some feeling for how distance is spread out in a matter-containing universe. In reality, everything probably exists "stat" – the past, now, the future, space and matter: they are all there and imprinted within the "mathematics". This is comparable to a DVD of a computer game – everything is "on the disc" and stays there even though the game can only be appreciated (by most punters) when played in "real-time", and to them it appears to be highly versatile in its output.

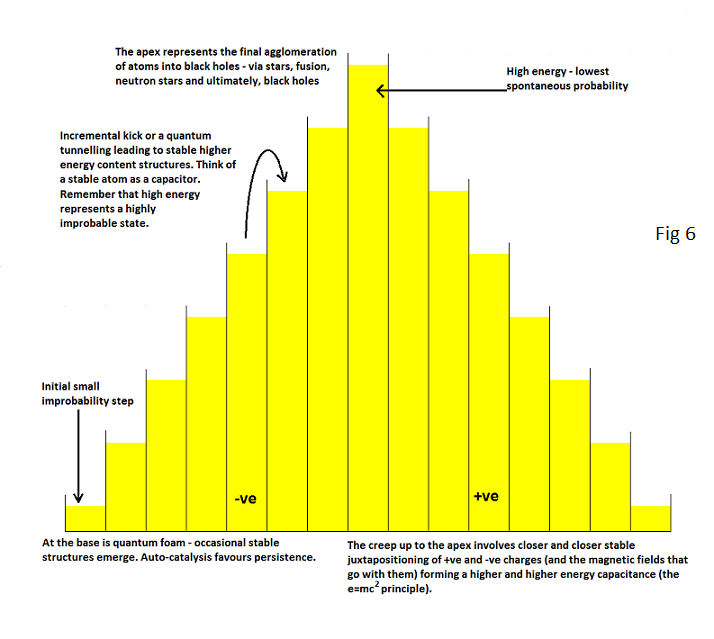

The emergence of matter and intelligent life must "grow" in incremental steps. Linnaeus' statement "Nature does not make jumps" could be rephrased into "Nature does not make many big, improbable jumps." Emergence occurs through sequential steps of gradually increasing interactive-complexity that are small enough to allow some sort of "ladder" (remember the previous observation that on either the top or the obverse sides of our metaphorical dice, highly unusual one-sided distributions can occur by sheer chance, whereas the sum of the front and obverse sides of the dice, when added together, always returns a value of absolutely "zilch"). Having a configuration that allows balanced positive and negative neg-entropy to reside closely side by side (the matter capacitor) without "touching" (annihilating) enables a series of progressive steps. Indeed we can see these steps occurring in the production of hydrogen, helium and lithium (big bang), then the ignition of nuclear fusion leading on to the creation of heavier elements (carbon, oxygen etc.), then supernova explosions and the creation of even heavier elements (iron being very important to our existence), then the collapse to a neutron star (electrons and protons squeezed to nuclear size to form neutrons, but still "held apart") and – finally – the conditions where the capacitor gap (perhaps) breaks down and what ensues is a singularity that tracks back to the beginning. The outcome is, potentially, a circuit where the uncertainty principle generates endless virtual photons and "heavier" particles that may persist, occasionally, either side of the mean (in balanced forward and backward "time"), evolve into galaxies and eventually disappear down a black hole plug hole before being re-circulated back to the general quantum foam. What "persists", counter to this flow, is a morphostatic structure of galaxies, stars and planets with occasional inhabitants that maintain their own form by various feedback processes.
Don't forget that the flow is occurring both in (what we consider to be) forward and backward time depending on whether it is extra-electron-shell or intra-nuclear.
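
The dice observation in this paragraph can be made concrete with a small simulation. This is a sketch under explicit assumptions, not a model of the physics: it assumes a standard six-sided die whose opposite faces sum to 7, and it scores each face as its deviation from the mean face value of 3.5. The names `roll_deviations` and `simulate`, the seed and the number of rolls are hypothetical choices for illustration. Under those assumptions the running total for the top faces alone can drift well away from zero by sheer chance, while the top and obverse totals added together always come to exactly zero.

```python
import random

MEAN = 3.5  # mean face value of a fair six-sided die


def roll_deviations(rng):
    """Roll once; return (top, obverse) as deviations from the mean."""
    top = rng.randint(1, 6)
    obverse = 7 - top  # assumption: opposite faces of the die sum to 7
    return top - MEAN, obverse - MEAN


def simulate(n_rolls, seed=0):
    """Accumulate top-side and obverse-side deviations over n_rolls."""
    rng = random.Random(seed)
    top_total = 0.0
    obverse_total = 0.0
    for _ in range(n_rolls):
        top_dev, obverse_dev = roll_deviations(rng)
        top_total += top_dev
        obverse_total += obverse_dev
    return top_total, obverse_total


top_total, obverse_total = simulate(1000)

# One side on its own may show a lopsided total ...
print("top sides only:    ", top_total)
print("obverse sides only:", obverse_total)
# ... but the two sides together always cancel.
print("both sides summed: ", top_total + obverse_total)
```

The one-sided totals change with the seed, which is the "transient borrowing" visible when only the top sides are counted; the combined total does not change, whatever the seed or the number of rolls.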

In this scenario a technological society might even be able to establish the conditions to ensure its own "creation" (either knowingly or unknowingly). This society has the potential to close out the time loop by initialising the configuration and conditions that would allow itself to emerge in the first place: but that is extremely, extremely, extremely conjectural.

So how could emergence from quantum foam occur? Current concepts are riveted to the belief that time is a real entity that is independent of anything else (that is, it is not an illusion brought about by the way other "forces" affect matter). These concepts are riveted to the assumption that systems only go forwards in time (1912 to 2012 direction and without exception). However, this apparent time direction is imposed on our senses (in a domineering way) by electrons and electron shell repulsion. This, and the "release" of electromagnetic radiation that can then track off into the deep voids of space, clearly point to an apparent single direction in which entropy increases. However, I have already alluded to the possibility that gravity affects atomic nuclei and baryons in a way that could be interpreted as a "backward in time" dispersal (back to nihil, zilch). If this is possible, then to fall down the +ve side of the diagram below has to be done in what we consider to be a 2012 to 1912 direction. But the past (big bang expansion) and the future (black hole collapse) may be much closer than we imagine – if not "the same phenomenon" viewed from two alternative perspectives. If this were the case, then we now "see" evidence suggesting cosmic inflation because we refuse to consider it as part of a counter-current flow of two differing entropies that affect all matter. To accept the scenario that all matter "exploded" in the instant of a big bang is to accept an event ludicrously more improbable than a jumbo jet falling together from its constituent parts (even more improbable than one punter winning, by sheer chance, all the world's top national lottery prizes every week for a year). To get around this we have to imagine multiverses that test out all the various possibilities until one bubble universe emerges that is just right (Goldilocks stuff).
But evolution and emergence are well-proven permissive systems – provided that the constituent steps are reasonably probable in the population from which they get selected. Given the option of which one to prefer, I know where I would like to place my lottery bet. And experience suggests that patterns are reiterated throughout the universe. If biological evolution (the auto-catalysis that leads to emergent systems) can do it, then the atoms and constituent waves from which biological molecules are constructed can almost certainly imitate this step.

Note that our current view of inflation tries to stuff the right-hand (+ve) entropic stairs into a "1912 to 2012" time direction. So we could be interpreting +ve charge entropy as an apparent emergence from a Planck-sized singularity up towards the event horizon.