Entropy

A non-physicist's interpretation

(This piece is relatively new and likely to evolve; it will probably change frequently.)

First, why is this discussion of entropy on a site about immunology? Well, it seems to me that "beating back the tide of entropy" has a large part to play in the function of the immune system (or the morphostatic system). This article is an amateur attempt to make sense of what is a physicist's domain and it must be read as such – there are bound to be wrong assumptions and errors; and discovering them is the way I learn and understand.

There appears to be substantial confusion around entropy and its (often assumed) association with order and disorder. To some extent, this confusion is understandable – and justified – so it is worth getting this clear. Entropy is a term that was introduced in the wake of the industrial (steam) revolution; the concept grew up around heat engines and their efficiency. So, it is most often introduced by considering the dispersion and equilibration of energy in steam engines or internal combustion engines. Ultimately, however, entropy is nothing more than a measure of the progressive change of a system (composed of multitudinous quantal objects – like molecules in motion) away from its initial (and also its most recent) "structure". The source of the energy "colours" the interpretation of entropy in different systems, so heat, electrical charge, electromagnetic radiation and chemical reactions can each "colour" the entropic systems that relate to them. Shannon also introduced us to the idea of information entropy – a problem that bedevils (transmitted) information. In the case of steam, the initial "structure" is usually a concentration of energy (heat and pressure) in a compartment (two separate closed systems up until the moment we open a valve), which is then used to power the system that comprises our steam engine. Entropy is not a measure of energy levels or of energy "loss".

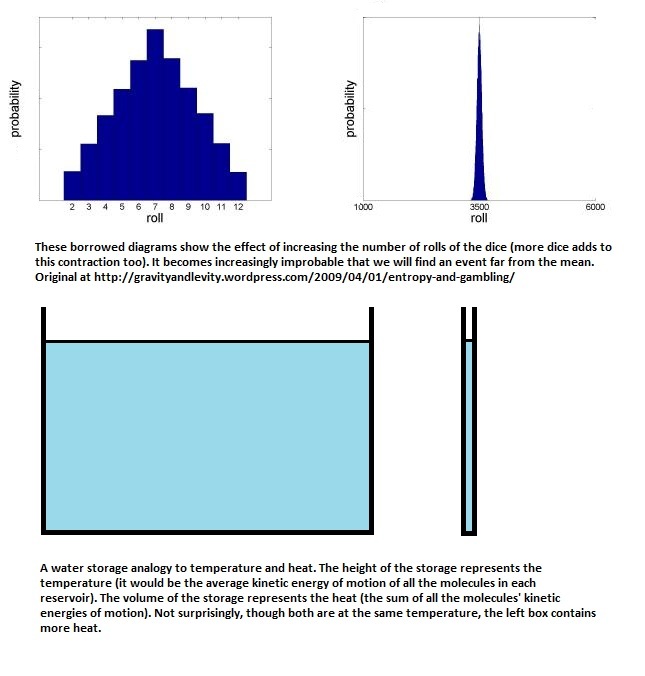

Several analogies have helped me gain a better understanding of entropy. The first is a useful introduction to some of what it involves. Consider a perfectly "insulated" box; we'll define this as a perfectly closed system (strictly, what physicists call an isolated system) in which there is no net loss or gain of energy through its walls; the total energy within the box remains constant. Consider an "engineered" situation in which the initial distribution of temperature is polarised. Some mechanism must be used to isolate our high energy source from the sink to which it will eventually flow, so our hypothetical box only becomes a single system once the energy is allowed to flow. (Note the common feature that certain systems remain isolated until they are "switched on" in some way – e.g., a tap, a switch or ignition; even a mixture of diesel and oxygen molecules remains, effectively, a set of energetically isolated constituents – or "compartments" – until ignited.) Suppose that the upper, front, left-hand region of our perfectly isolated box is kept at a much higher temperature (and/or pressure) than the rest of the box. This establishes a potential difference of temperature (and/or pressure) – an energy gradient. By employing some ingenious mechanism to tap this potential difference (henceforth PD), work can be extracted as this temperature (and/or pressure) differential flows, disperses and equilibrates. (Entropy flow only occurs in actively equilibrating systems.) We are able to divert some of this energy flux to do work for some human purpose (e.g., to drive a steam engine). Once the work has been extracted, the temperature gradient throughout the box will move towards equilibrium by the progressive and uniform dispersion of heat throughout the box. The end point is reached when the distribution of molecular kinetic energies is, both within large ("macroscopic") and small ("microscopic") areas, very much the same throughout the box.
Then there is no further flux of energy available from which to extract work or to use for some structured function (like turning a turbine). At this "end" point, the system has settled into a state of high entropy (maximal energy dispersion and equilibration: or energy homogenisation). Note that it is the average kinetic energy of motion of the molecules that determines temperature and it is the total combined kinetic energies of motion of the molecules that determines the amount of heat (see the diagrams below – note that the temperature of the thin tank could be twice as high and yet still contain less heat; nevertheless, it is the temperature that determines the "direction" of entropy flow – a high to low temperature flow results in a low to high entropy transition).
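
The distinction between temperature and heat drawn above can be put into a few lines of arithmetic. This is a minimal sketch under loudly stated assumptions: "temperature" is modelled as the mean kinetic energy per molecule, "heat" as the total kinetic energy, and the tank sizes and energies (`thin`, `big`) are hypothetical numbers in arbitrary units, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Tank:
    molecules: int    # number of molecules in the tank
    mean_ke: float    # average kinetic energy per molecule: the toy "temperature"

    @property
    def total_ke(self) -> float:
        """Total kinetic energy of all molecules: the toy "heat" content."""
        return self.molecules * self.mean_ke


def shared_mean(a: Tank, b: Tank) -> float:
    """Mean kinetic energy per molecule once the tanks are connected and have
    equilibrated, assuming no energy enters or leaves the pair."""
    return (a.total_ke + b.total_ke) / (a.molecules + b.molecules)


thin = Tank(molecules=1_000, mean_ke=2.0)   # hypothetical thin tank: twice as "hot"
big = Tank(molecules=10_000, mean_ke=1.0)   # hypothetical large tank: cooler

print(thin.total_ke, big.total_ke)          # 2000.0 10000.0
print(round(shared_mean(thin, big), 4))     # 1.0909
```

The thin tank holds less total energy yet is the "hotter" of the two; once connected, the shared mean settles between the two starting temperatures, so energy flows from the higher mean to the lower one whatever the totals.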

For the second analogy, imagine a screen made up of many small (pixel-like) discs that are free to move laterally in the plane of the screen (but not to flip over – the relevance of this becomes clear later). Let's arrange it very much like a high definition TV screen, with tiny individual discs acting as its pixels. Initially, just to emphasise a point, we'll number them in sequence: top row left to right, then the second row, and so on. These discs can be agitated (note that agitation is energy dependent) so that their local positions progressively migrate (like a form of Brownian motion). They will gradually creep away from their original positions and will do so every time the screen is agitated. The initial numbered sequence will "never" return (such a return remains possible, but it is vanishingly improbable). Now, let's arbitrarily stop the agitation and imprint a copy of a famous Constable over the screen (remember, this is reproduced over all the individual faces of these discs/pixels). Now let's recommence the agitation. Gradually, the Constable will lose definition and eventually take on a hue that is a mixed average of all the original colours in the Constable. If the agitation is slow (a temperature analogy being that it is near absolute zero), the degradation of the Constable image will be very slow. If the agitation is fast (the temperature analogy now being that it is near the boiling point of water), the changes will be more rapid and the "equilibrium" hue will be reached much more quickly. Now, let's stop the agitation long enough to (arbitrarily) imprint a new image – this time I'll use the black print of a Shakespeare sonnet on a white background. Over time, this too will "degrade" into a homogeneous grey appearance. The original structure has again "degenerated" towards an average (the mean). This cycle of imprinting and loss could go on indefinitely.
The big point is that any process that "imprints" large scale patterning in our universe will inevitably "degenerate" (from our function-hungry perspective) towards a bland microscopic sameness, and all macroscopic patterns will experience a "pressure" to return towards the statistical mean. What is happening is microscopic mixing leading to macroscopic homogenisation. What is lost are meaningful macroscopic patterns (that is, meaningful to us! – for example, the picture or the prose). With a different analogy, it could be a loss of "functioning form" (e.g., the concentration of a high PD in one or more sectors of a box, or the deterioration in the structure of an elegant building). There is, effectively, a pressure on the constituent quanta that make up the macro-structure to disperse (and, in our perception, to degenerate). The change is not only progressive but also continuous. The system is constantly changing away from its most recent arrangement of quanta, and this change means that differences eventually become appreciable only at the minutest (quantal) level. This depends upon the energy-driven agitations continuing, or upon some fundamental basal agitation remaining (e.g., a temperature above absolute zero or, ultimately, the agitations inherent in quantum foam). This process results in a "perpetual" mixing of the constituent quanta. Every one of these redistributions, however, is most likely to leave the system looking like a homogenised whole that is non-homogeneous only when a small group of quanta is viewed "under a microscope". Our parish in this universe is "occupied" by many systems that are in an exceedingly improbable state. Consider a living animal body, for example; any "rearrangement" of its constituent "bits" is more likely to be towards a more probable distribution than towards an improbable one. That is why it needs complex morphostatic mechanisms to maintain it.
It is these exceedingly improbable "starting points" that encourage us to equate entropy with disorder; but these are parochial conditions in the extreme and are absolutely atypical of the average conditions in the vast volume of universal space. Because our environment is subject to a constant shift away from the latest configuration, anything that we order will, under the influence of entropy, find itself under a pressure to become disorganised – unless we refresh the order (morphostasis). Entropy is the natural antagonist to order. But disorder is NOT synonymous with entropy; it is only a consequence of the pressure exerted by it. Equating entropy with disorder is a positive, assertive presumption that tends to miss the point. Order is important to us humans; entropy – by homogenising everything – inevitably leads to the degradation of order. But, in the first place, order starts out its existence as a highly improbable statistical distribution, and it needs active maintenance if it is to persist.
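
The disc-screen analogy lends itself to a small simulation. The sketch below is only an illustration under my own assumptions: a 32 × 32 grid of discs imprinted with a hypothetical two-tone "image" (left half black, right half white), "agitation" modelled as swapping a randomly chosen disc with a random neighbour, and "surviving macroscopic pattern" measured as the variance of the 8 × 8 tile averages. The function names (`imprint`, `agitate`, `block_contrast`) are hypothetical.

```python
import random


def imprint(size: int) -> list[list[int]]:
    """Hypothetical two-tone 'image': left half black (0), right half white (1)."""
    return [[0 if col < size // 2 else 1 for col in range(size)] for _ in range(size)]


def agitate(grid: list[list[int]], swaps: int, rng: random.Random) -> None:
    """Each 'shake' swaps one disc with a randomly chosen neighbour (edges wrap round).
    Discs are only moved, never created or destroyed, so the total is conserved."""
    n = len(grid)
    steps = [(0, 1), (0, -1), (1, 0), (-1, 0)]
    for _ in range(swaps):
        r, c = rng.randrange(n), rng.randrange(n)
        dr, dc = rng.choice(steps)
        r2, c2 = (r + dr) % n, (c + dc) % n
        grid[r][c], grid[r2][c2] = grid[r2][c2], grid[r][c]


def block_contrast(grid: list[list[int]], block: int) -> float:
    """Variance of the average colour of each block x block tile:
    a crude measure of how much large-scale pattern survives."""
    n = len(grid)
    means = []
    for r0 in range(0, n, block):
        for c0 in range(0, n, block):
            tile = [grid[r][c] for r in range(r0, r0 + block) for c in range(c0, c0 + block)]
            means.append(sum(tile) / len(tile))
    overall = sum(means) / len(means)
    return sum((m - overall) ** 2 for m in means) / len(means)


rng = random.Random(42)
screen = imprint(32)
before = block_contrast(screen, 8)   # 0.25: every tile is pure black or pure white
agitate(screen, 200_000, rng)
after = block_contrast(screen, 8)
print(before, after)
```

The overall average colour never changes, because discs are only moved; what fades is the large-scale pattern. The exact value of `after` depends on the random seed, but it ends up far below the starting 0.25.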

This quantal (statistical) perspective differs from seeing the dispersal as simply the effect of the high physical pressure of one pixel (molecule) against another. This pressure does speed up the changes; at lower agitation rates – most often consequent upon lower temperatures – the mixing will be slower, but it will eventually tend to the same asymptote. So, extrapolating this to energy gradients, the agitation that accompanies the flow of energy (heat is always a by-product of this) does provide a driving component, as there will be no agitation without some PD to cause it. But the PD itself (energy) is a statistically improbable concentration of quanta that, upon any "agitation", is most likely to adopt a less improbable distribution and revert towards the mean. The process is one of agitation-induced rearrangements leading to statistically more probable diffuse distributions. High energy potentials represent statistically improbable concentrated distributions. Spontaneous or purposeful agitation (an example of the latter is ignition by a spark plug) enables a flux towards a more probable diffuse distribution, and this flux itself amplifies the agitations. It does so in a catalytic fashion that continues until the original statistically improbable distribution disperses, asymptotically, towards nothing greater than base random agitations. The most fundamental of the latter are the direct consequence of the uncertainty principle and its resultant quantum foam.
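
The claim that slow and fast agitation tend to the same asymptote can be illustrated with a deterministic toy model, which is my assumption rather than anything in the text: two compartments of energy, where each "agitation" moves a fixed fraction (`rate`) of the energy gap from the hotter compartment to the colder one. A smaller `rate` stands in for gentler, lower-temperature agitation. `relax` and `agitations_needed` are hypothetical names.

```python
def relax(e_hot: float, e_cold: float, rate: float, agitations: int) -> tuple[float, float]:
    """Each agitation moves `rate` times the current energy gap from hot to cold.
    Total energy e_hot + e_cold is conserved. Assumes 0 < rate <= 0.5."""
    for _ in range(agitations):
        flow = rate * (e_hot - e_cold)
        e_hot -= flow
        e_cold += flow
    return e_hot, e_cold


def agitations_needed(rate: float, tolerance: float = 1e-3) -> int:
    """Agitations until a unit energy gap shrinks below `tolerance`.
    Each agitation multiplies the gap by (1 - 2 * rate)."""
    gap, count = 1.0, 0
    while gap > tolerance:
        gap *= 1 - 2 * rate
        count += 1
    return count


fast = relax(2.0, 0.0, rate=0.4, agitations=50)     # vigorous agitation
slow = relax(2.0, 0.0, rate=0.01, agitations=2000)  # gentle agitation
print(fast, slow)                                   # both close to (1.0, 1.0)
print(agitations_needed(0.4), agitations_needed(0.01))
```

Both rates end at the same shared value (half the starting total); the gentle one simply needs many more agitations to get there. The model only makes sense for `rate` up to 0.5; above that the gap would flip sign on every agitation and overshoot.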

The screen analogy highlights certain things. Every shake (agitation) leads to what is, effectively, a new and absolutely unique rearrangement. (Remember the numbering of the screen pixels: top row left to right, then the second row left to right, and so on; this precise sequence will almost certainly never return.) But some arrangements mean more to us humans than others. The meaningful ones are statistically more improbable because they require large scale – macroscopic – form; this form will "degenerate" (disperse) unless it is maintained (morphostasis). Eventually, the only variations left will be appreciable at a microscopic level. (An aside: on a computer chip, small as it is, random mixing at the nano scale would be devastating; the chip must retain its macro – whole chip – structure and not move away from it. By analogy, our "screen" must remain in more or less the exact numbering sequence it was manufactured with, otherwise it will no longer function properly.) Macroscopic patterns are progressively lost; at first, the change is towards smaller "units" that reflect some of the structure of the original screen, but then, progressively, every small section of the screen that we examine will possess a local form that lies ever closer to an average of the whole original screen. The greater the number of pixels making up the screen, the more uniform this homogenisation becomes. This break-up reminds me of fractal repetition at smaller and smaller scales. We humans find important meaning and usefulness in form and structure, and we know from experience that there is a pressure towards the degradation of form, structure and their apparent function; these all progressively degenerate. There is a strong analogy with informational entropy. So, the entropic dispersal of energy is inevitably accompanied by the loss of macroscopic form and structure.
The total original energy in the closed system persists, but it becomes spread out evenly and PDs are now only discernible on the smallest scales (faintly reminiscent of what is believed to characterise the smallest scales in quantum foam).
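
How improbable is the return of the original numbering? If we assume, for simplicity, that every shake leaves the discs in a completely random order (a simplification of mine; real agitation is local and gradual, although in the long run every ordering becomes equally likely), then n numbered discs can be arranged in n! ways and the chance of any one shake restoring the exact sequence is 1/n!. The hypothetical helper `log10_arrangements` works in logarithms because n! overflows ordinary numbers very quickly.

```python
import math


def log10_arrangements(n: int) -> float:
    """log10(n!): the number of distinct orderings of n numbered discs, as a power of ten."""
    return math.lgamma(n + 1) / math.log(10)


for n in (10, 100, 1_000_000):
    exponent = log10_arrangements(n)
    print(f"{n:>9,} discs: chance of the original order is about 1 in 10^{exponent:.0f}")
```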

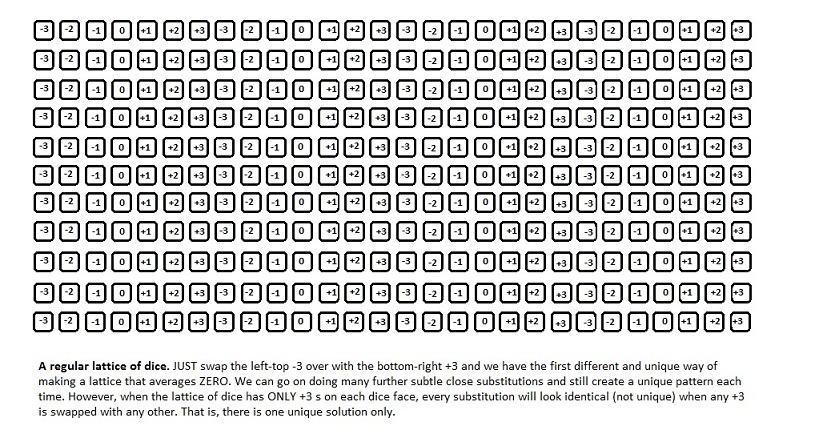

A third analogy shows how heat entropy can be understood (in a statistical way) as the most probable pattern of distributions of molecular velocities in a closed system (or box). As time passes, we can assume that every new redistribution of these velocities will be unique (exact repetitions are vanishingly improbable); but when the kinetic energies of a local (microscopic) group of molecules are summed and averaged, there is a strong tendency for these averages to "move" progressively towards the average of all the different molecular kinetic energies in the whole – original and continuing – system. This "progression to the statistical mean" in all micro-regions happens even though the constituent quanta are undergoing constant change. The situation is comparable to a lattice of randomly "thrown" dice in a box. We can envisage that, with every additional agitation of the dice, it is improbable that we will find a growing concentration of just 6's in the top left-hand corner of the box; this could happen by chance, but it is highly improbable.
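
The dice version of the argument is easy to quantify. Assuming fair six-sided dice thrown independently (my simplification), the chance that k particular dice in the corner all show a 6 on one throw is (1/6)^k, which collapses very quickly as the corner region grows. `chance_all_sixes` is a hypothetical helper; Python's `fractions.Fraction` keeps the small values exact.

```python
from fractions import Fraction


def chance_all_sixes(k: int, faces: int = 6) -> Fraction:
    """Probability that k particular fair dice all show their highest face on one throw."""
    return Fraction(1, faces) ** k


for k in (1, 4, 16, 64):
    p = chance_all_sixes(k)
    print(f"{k:>2} dice in the corner: {float(p):.3g}")
```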

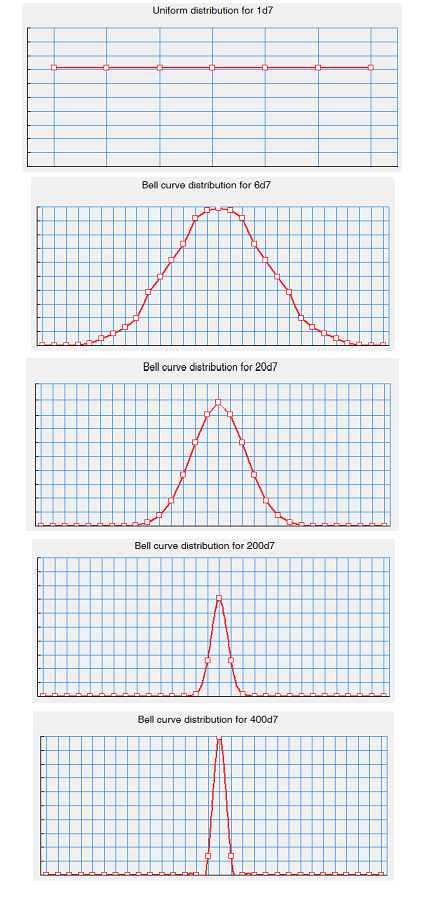

To extend this perspective, imagine two things. First, we'll use seven-sided dice with the values −3, −2, −1, 0, +1, +2, +3, which represent energy levels below, at and above the average value in this box. Ignore the practicalities and assume that they function, and can be read, as effectively as six-sided dice. Second, imagine that the box is shaken regularly and that, each time all the dice come to rest, the distribution of −3's to +3's is recorded. It is easy to speculate that, on average, the system will evolve towards an even distribution of −3's to +3's in any arbitrary subdivision within the box (the whole box ending up with close to N −3's, N −2's, N −1's, N 0's, N +1's, N +2's and N +3's – where N is a very large number – and each smaller subdivision of the box having close to N/f of each, where 1/f is the subdivision's fraction of the whole box). So the summed value in any focal region evolves to lie close to the mean of the values in the whole box. (As an illustrative point, we could have arbitrarily defined the starting point as all 0's – a highly specific and statistically unlikely distribution, but one that returns a summed value that is bang on the mean – so this is an occasion where there is both high entropy and high order, and it emphasises that entropy and disorder are NOT synonymous.) In small areas occupied by just a handful of dice we are more likely to find wilder fluctuations; these fluctuations "iron out" over the whole box and even do so within small regions of the box. So, at the microscopic level, we will find a distribution like the first histogram below (the histogram on the left). The larger the N (number of dice "shakes" or "rolls"), the sharper the central peak that describes the most likely prevalence and distribution of the local summation of the dice faces (−3's to +3's; this is shown in the histogram on the right). There is more analysis of this in the Endnote.
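
The narrowing of the histogram can be checked numerically. The sketch below assumes fair seven-sided dice with faces −3 to +3 (so the mean is 0 and the standard deviation of a single die is exactly 2) and treats a "region" as a group of dice thrown together. For regions of n dice, standard probability theory predicts that the spread (standard deviation) of the regional average shrinks as 2/√n, which corresponds to the sharpening central peak described above. `regional_means` is a hypothetical helper, and the seed is arbitrary.

```python
import random
import statistics

FACES = (-3, -2, -1, 0, 1, 2, 3)   # hypothetical seven-sided die, mean 0


def regional_means(dice_per_region: int, regions: int, rng: random.Random) -> list[float]:
    """Throw every die in each region once and return each region's average face value."""
    return [
        statistics.fmean(rng.choice(FACES) for _ in range(dice_per_region))
        for _ in range(regions)
    ]


rng = random.Random(1)
for n in (1, 10, 100, 1000):
    spread = statistics.pstdev(regional_means(n, regions=500, rng=rng))
    # Observed spread of regional averages versus the 2 / sqrt(n) prediction
    print(f"{n:>5} dice per region: spread {spread:.3f}, predicted {2 / n ** 0.5:.3f}")
```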

Energy concentrations within a system are, in effect, just statistically improbable macro-(re)distributions. These are unlikely to emerge by chance – they are usually "arranged" in some way by us (e.g., when Duracell make an AA battery). As soon as suitable "agitations" of the system are triggered (by energy flow; e.g., as we open a valve or flick a switch or energise a spark plug) the microstructure of the system will begin to evolve into statistically more probable micro-redistributions and the original macro-structure progressively "degenerates" (by our view). In our energy-in-a-box illustration, every agitation results in a unique redistribution; probability favours macro to micro redistributions. The commonest distributions are those where the summed and averaged energy in any micro-region of the system "adds up" to a value that gets progressively closer to the average energy level of the whole system. That is, the energy becomes dispersed throughout the system. We might still find big energy fluctuations at the very smallest possible scales, i.e., within groups that involve just a few quanta where such statistical aberrations are not so improbable. In the seven sided dice illustration, the −3 to +3 values are analogous to energy values, and this shows that the total population of energies in the box will approximate, very closely, N zeros (N to reflect the number of dice; zero is arbitrarily defined as the mean: should we have chosen dice with the values +1 to +7 then the mean would be +4). Should the value of N be small, then there would be a much enhanced chance of finding that all of the dice show +3's on at least one occasion – provided the observation period is long enough. 
However, as we increase N, particularly to the staggeringly large values typical of the number of molecules in a box (let alone quanta/strings in the universe), the distribution of the scatter around the average (which in this dice analogy is zero) becomes more and more sharply confined to a small range close to the mean (see figures). Effectively, in energy gradients, we see that macrostructures are transformed into microstructures that carry over some of the caricature of the original macro-structure, but at a smaller scale (faintly reminiscent of fractals). In the case of a box of molecules, the molecules (analogous to the dice) are in constant agitation (analogous to individual shakes – or redistributions – of the dice), and this agitation cycles at an extremely rapid rate. Three things combine to make the number of possible permutations of the distributions very large. First, there is a huge number of molecules in the box (equivalent to dice). Second, there is a huge number of agitations (equivalent to dice shakes). Third, there is a range of possible values for the kinetic energy of single molecules (comparable to the numbers on the faces of each die). Our everyday "common sense" tempts us to think that the reason the molecules "want" to spread out evenly is the pressure exerted by each molecule on its neighbours. In the case of steam, hotter regions agitate faster and approach the mean faster than cooler regions, but the same mean is eventually attained by both. This statistical approach suggests a novel perspective: it is the number of agitations, rather than their rate, that leads to this progression to the mean and to the "pressure" to change. Furthermore, mathematically, it models the actual situation well. This is how Boltzmann wrote his – now famous – equation for entropy ...
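
The role of N in the paragraphs above can be made concrete with the seven-sided dice. If each shake is assumed to re-randomise every die independently (my simplifying assumption), the chance that all N dice show +3 on a given shake is (1/7)^N, so the expected number of shakes before it first happens is 7^N. `expected_shakes_all_plus_three` is a hypothetical name; the last line, which treats Avogadro's number of molecules as dice, is only an order-of-magnitude illustration.

```python
import math

AVOGADRO = 6.02214076e23   # particles per mole (exact in the 2019 SI)


def expected_shakes_all_plus_three(n_dice: int, faces: int = 7) -> int:
    """Mean waiting time (in shakes) for every one of n_dice fair dice to show +3
    at once: a geometric distribution with success probability (1/faces)**n_dice."""
    return faces ** n_dice


for n in (1, 2, 5, 10, 20):
    print(f"{n:>2} dice: about {expected_shakes_all_plus_three(n):,} shakes")

# Too large to compute directly, so report the power of ten instead.
exponent = AVOGADRO * math.log10(7)
print(f"a mole of 'dice': about 10^({exponent:.3g}) shakes")
```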

$$S = k \log_e W$$

... where S is the value of entropy, k is Boltzmann's constant and W represents the number of possible permutations in the "microstates" of the system (these microstates correspond to our metaphorical shaking, throwing and counting of the dice – the mobile quanta). "Common sense" suggests that there is no place for a situation in which all the molecules might spontaneously occupy the maximum possible kinetic energy of motion (or, alternatively, the minimum), because the total energy in the box cannot exceed what is there at the beginning – the law of conservation of energy. Statistically, however, this is a definite possibility, although vanishingly rare; the "payback" for this transient aberration is that such a "borrowed" event would last for only the briefest possible (Planckian) instant and, because an equal divergence to the opposite value is just as likely to occur, the net outcome at all but the most minute time intervals closely approximates the mean (this is reminiscent of the chance creation of virtual particles in quantum foam). Now, this also colours the perception of the law of conservation of energy. Energy is nothing other than an improbable distribution of quanta of some kind that "want" to return to the mean – a more probable distribution – and the mean lies close to the total averaged quantal levels of the system. Ultimately, all energy can be translated into an improbably concentrated (compacted) distribution (dance) of photons. I suspect that all the other manifestations of energy (potential, kinetic, heat, chemical, radiation and so on) are complex manifestations of this basic "photon into matter" compaction (see the section below entitled "even wilder speculation here"). In quantum foam, there is a rapid (which equates to a very short distance) return to the "mean". The "mean" should be represented by utter nothingness – "nihil" – "zilch" – "non-existence" (a virtual state) – with quantum foam lying close by on either statistical side.
(Indeed, the uncertainty principle seems to encourage a boiling, yocto-scopic jitteriness either side of nothing. Note that micro-, nano-, pico-, femto-, atto-, zepto- and yocto- denote increasingly small scales. There is more on this general principle in the speculation section.)
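
To make Boltzmann's formula concrete, here is a minimal sketch with a toy system of my own choosing (not one from the text): 100 two-sided discs, where a "macrostate" is simply how many discs show their upper face and W is the number of arrangements giving that count, C(100, up). The constant `K_B` is the SI value of Boltzmann's constant; `boltzmann_entropy` is a hypothetical helper.

```python
import math

K_B = 1.380649e-23   # Boltzmann's constant in J/K (exact in the 2019 SI)


def boltzmann_entropy(microstates: int) -> float:
    """S = k ln W for a macrostate that can be realised in `microstates` ways."""
    return K_B * math.log(microstates)


N = 100   # hypothetical number of two-sided discs
for up in (0, 10, 50):
    w = math.comb(N, up)   # arrangements with exactly `up` discs face up
    print(f"{up:>2} up: W = {w:.3e}, S = {boltzmann_entropy(w):.3e} J/K")
```

The logarithm is what makes the entropies of independent systems add: combining systems with W₁ and W₂ microstates gives W₁ × W₂ microstates, and k ln(W₁W₂) = k ln W₁ + k ln W₂.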

Within the closed system of our theoretically perfect box, no energy is added or lost; our definition has proclaimed this. All that happens is that the energy is redistributed evenly throughout the box, so that no usable "macroscopic" PDs remain to be tapped for our wilful purposes. A useful illustration is to think of a box containing oxygen and a jar of diesel, in matching quantities that will ensure complete combustion once the diesel is ignited. This initial system (effectively two isolated systems, oxygen and diesel, before ignition) has low entropy and remains in this state until it is ignited (at which point the low to high entropy flux begins). All the diesel then burns, reacting with all the oxygen. The box transforms into a state of high entropy, where no new work can be "tapped" within the box because equilibrium has been reached and no workable PDs are left (even though the contents of the box are now much hotter). Similarly, 100 ml of deuterium in a very large box represents a potential state of very low entropy (sometimes described as negentropy, though the qualifier high- or low- should also be added to this term) which, if a sustained fusion reaction could be initiated, would flow to a state of high entropy even though the contents of the box would become extremely hot and the pressure very high – for we have decreed that no energy flows out of our perfect system. This energy is, instead, uniformly dispersed – equilibrated – throughout the box. If this box is now "opened" by connecting it to a "sink" at a lower temperature, the now-expanded system acts like a low entropy system. When we talk of low entropy (energetic) systems, we usually mean that they contain a source of concentrated potential energy capable of providing an energy flux to do work. They need a macroscopic PD to enable an energy flux.
So a low to high entropy shift represents a shift from a locally concentrated to a generally dispersed energy distribution. (Remember, however, that the statistical entropy concept does not concern itself with the form of the original macro-structure; for all it cares it could be a concentration of high energy or a concentration of meaningful, typed words – as in the earlier illustration of a Shakespeare sonnet on a screen.)
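
The shift from a concentrated to a dispersed energy distribution can be counted directly. The sketch below swaps in a standard textbook toy model (the Einstein solid, my choice rather than the author's): Q indistinguishable energy quanta shared among oscillators, where the number of ways of sharing q quanta among N oscillators is C(q + N − 1, q). The box is split into two hypothetical halves A and B of 50 oscillators each, holding 100 quanta in total; `ways` is a hypothetical helper.

```python
import math


def ways(oscillators: int, quanta: int) -> int:
    """Ways to share `quanta` identical energy units among `oscillators` holders."""
    return math.comb(quanta + oscillators - 1, quanta)


N_A = N_B = 50   # hypothetical halves of the box
Q = 100          # hypothetical total number of energy quanta (conserved)

concentrated = ways(N_A, Q) * ways(N_B, 0)                  # all energy in half A
by_split = {q: ways(N_A, q) * ways(N_B, Q - q) for q in range(Q + 1)}
even_split = max(by_split, key=by_split.get)                # most probable share for A

print("all energy in A:", f"{concentrated:.3e}")
print("most probable q_A:", even_split, f"W = {by_split[even_split]:.3e}")
print("ratio:", f"{by_split[even_split] / concentrated:.3e}")
```

With all the energy packed into half A there are vastly fewer arrangements than when it is shared evenly, so, if every arrangement is assumed equally likely, agitation almost always carries the system from the concentrated state towards the even split.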

All of this should inform us that, in various epochs/parishes of our universe, the low to high entropy conditions may differ. Here, in our parish, iron won't give up fission or fusion energy (it has high entropy). However, my guess is that, if it were possible to transport a few iron filings to the very early universe, they would convert some of their mass into intense radiation through a fission reaction; alternatively, if it were possible to inject iron filings into a neutron star, they would convert some of their mass into intense radiation through a fusion reaction. Both of these theoretical fusion and fission potentials for iron would be typical "low entropy states" in their respective transposed parishes.

In our own confined spatio-temporal parish of the universe, conditions are a long way from the bland sameness (energy dispersion and equilibration) of most of the universe, particularly of deep intergalactic space (which constitutes by far the vast majority of our "visible" universe's volume). The average density of the universe (including the Earth, our Sun, and countless galaxies with their super-massive black holes) is of the order of 1 to 4 hydrogen atoms per cubic metre (it is likely that our universe "today" has a volume of some 10⁷⁵ – 10⁸⁰ cubic metres). So, here on Mother Earth, close to our nearest star (the Sun), we are hugely lulled into the sense that it is normal and usual to have a strong flux of sunlight (radiation), to be under the influence of substantial gravity and to enjoy swimming in densities like that of water (in the region of 10²⁸ H₂O molecules per cubic metre). The constant flux of radiant energy from the Sun provides us with a seemingly perpetual flow of energy (leading to our low entropy system). Galactic and solar "time whirlpools" are manifestations of (and emphasise the "force" of) gravity. In effect, gravity leads to an unrelenting one-G "elevator", or "rocket", under the soles of our feet. See if you can work out where the energy comes from to fuel the "work done" by this constant and apparently perpetual acceleration – it is our virtual rocket motor; it must be supplied and/or "borrowed" from somewhere. Imagine how much energy you would have to suddenly dissipate if you could arrange to travel back in time some 200 years. Moving forward in time ("arriving in the future") is a well established reality for accelerating objects. The objects arrive back in a non-accelerated parish with less time having passed for them than for the residents who did not accelerate. This slowing of time is consequent upon a great deal of prolonged acceleration and the energy (improbability distribution) acquired in doing so.
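
The contrast in particle densities is easy to check with back-of-envelope arithmetic. Assuming liquid water at about 1000 kg per cubic metre and a molar mass of 0.018015 kg per mole (standard values; the rounding is mine), the number of H₂O molecules in a cubic metre follows from Avogadro's number, and can then be compared with the cosmic average of 1 to 4 hydrogen atoms per cubic metre.

```python
import math

AVOGADRO = 6.02214076e23        # particles per mole (exact in the 2019 SI)
WATER_DENSITY = 1000.0          # kg per cubic metre, approximate for liquid water
WATER_MOLAR_MASS = 0.018015     # kg per mole of H2O

water_per_m3 = WATER_DENSITY / WATER_MOLAR_MASS * AVOGADRO
print(f"H2O molecules per cubic metre: {water_per_m3:.2e}")   # 3.34e+28

# Compared with roughly 1 to 4 hydrogen atoms per cubic metre on average in the universe
for cosmic in (1, 4):
    print(f"ratio to {cosmic} atom(s) per m^3: about 10^{math.log10(water_per_m3 / cosmic):.1f}")
```

By particle count, water is some 28 orders of magnitude denser than the cosmic average, which is the point of the comparison.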

All of these parochial conditions are evidence of our Sun's low entropy state: there is a concentration of energy waiting to be unleashed and to flow out into the grander universe, where it can equilibrate and disperse, funnelling its flux through the Earth when the Earth gets in the way. So, we can tap into solar power directly using solar cells, less directly through the generation of carbohydrates by plants (biomass), and indirectly through the long-term storage of hydrocarbons in fossil "fuels" (fuel being an anthropocentric perspective – the promise of energy gradients that we can tap into to do work). Wind turbines tap into a further consequence of this flux (wind is caused by the interaction of solar radiation, gravity and the Earth's spin), while tidal turbines tap mainly into the gravitational pull of the Moon (and, to a lesser extent, the Sun) on the spinning Earth. So nearly all of this local flux of energy originates in nuclear fusion in the Sun, and it is supplemented within the Earth by the radioactive decay of elements previously "manufactured" in a supernova; this decay helps to heat the mantle and core. So we experience solar radiation, volcanoes and the biomass. Do not forget the low entropy significance of the oxygen that is generated as a by-product of photosynthesis when it "traps" solar radiation for carbohydrate generation.

Order emerges spontaneously wherever there are "workable" PDs. A thunderstorm might appear chaotic to us, but chaos theory shows how regular structure can emerge within this "chaos". The trend is for spontaneous order to emerge, to be perpetuated and to be maintained in a morphostatic manner for as long as the PD gradients persist. Chaos theory describes how this ordering process may become highly structured. The products have definite form, but this form will disperse if the sources of the energy (the PDs that feed these energy fluxes) collapse. These structured forms are often fractal in nature. So, when an area of high energy interacts with an area of low energy, it encourages spontaneous structuring (order) that will quickly dissipate once the PD "dries up" as the system changes towards high entropy. So, low entropy to high entropy fluxes are essential precursors to the emergence, and then the decay, of order; but it is only in the flux between high and low PDs (a situation that itself comprises a low entropy system) that spontaneous ordering processes emerge in the first place. I find it easier to think of entropy as a level of entropic saturation (very low, moderate or almost total saturation) than to think of low and high entropy.

So what happens when a highly unsaturated entropy system interacts with a saturated entropy system? Well, this is, surely, just a nonsense question? By definition, our entropy systems are closed and incommunicative (although no real "box" is impenetrable to energy – remember our box of deuterium and imagine what would happen to its walls if the deuterium did "go nuclear"). Therefore, if one closed system is opened up to another closed system, we are forced to reassess the entropic state of the combined new system. (In our parish of the universe, events frequently occur that may quite suddenly change the status of local "compartments".) Unsaturated entropy systems that are suddenly connected in this way will retain at least some level of unsaturation, but two saturated entropy systems could become an unsaturated system (e.g., a cold contents box at equilibrium connecting to a hot contents box at equilibrium). In our solar system, baryonic matter is "gathered together" by gravity. And, it is so far away from deep intergalactic space that radiant energy is the only significant highway for energy to leak out into the great beyond and on to the biggest and ultimate "pit" of saturated entropy in our universe.
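
The example of a cold box and a hot box, each already at equilibrium, being connected can be worked through with textbook thermodynamics. The sketch assumes two boxes with equal, constant heat capacity `c` (4184 J/K, roughly that of a kilogram of water, is my illustrative choice) and no loss to the outside. Each box's entropy change is c·ln(T_final/T_initial): the hot box loses entropy and the cold box gains more, so the combined system has room for an entropy increase that neither box had on its own. `entropy_change_on_connecting` is a hypothetical name.

```python
import math


def entropy_change_on_connecting(c: float, t_hot: float, t_cold: float) -> tuple[float, float]:
    """Two boxes with equal constant heat capacity c (J/K), at temperatures in kelvin,
    are connected and left to equilibrate with no loss to the outside.
    Returns (final temperature in K, total entropy change in J/K)."""
    t_final = (t_hot + t_cold) / 2                 # energy conservation with equal c
    ds_hot = c * math.log(t_final / t_hot)         # negative: the hot box loses entropy
    ds_cold = c * math.log(t_final / t_cold)       # positive, and larger in magnitude
    return t_final, ds_hot + ds_cold


t_final, ds = entropy_change_on_connecting(c=4184.0, t_hot=360.0, t_cold=280.0)
print(f"final temperature {t_final:.1f} K, entropy change {ds:+.2f} J/K")
```

The total is positive whenever the starting temperatures differ (the arithmetic mean of two temperatures always exceeds their geometric mean) and exactly zero when they are equal, matching the idea that two "saturated" boxes at different temperatures form an "unsaturated" combined system.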

The take-home message for morphostasis is that structure arises by emergence (linked to chaos theory) wherever there is an available PD of some sort, and it often does so in fractal forms. All life depends upon tapping free energy gradients and the work that can be extracted from them. An inescapable characteristic of the way this energy flux can be "tapped" is that the ordering process is inefficient: more energy is needed to create the ordered system than remains available for later return. It is necessary to "rob Peter of somewhat more than you must pay Paul" – it is a "greedy, self-engrossed" strategy with no "mercy" for the maintenance of the extant and extended negentropy environment. Indeed, it "short-changes" this extended negentropy environment in a slingshot fashion (comparable to the way satellites are accelerated out into deep space using a planetary slingshot; the satellite is accelerated out into the outer solar system at the expense of the planet, which is accelerated, admittedly by a minuscule amount, in towards the Sun). This inefficiency is due to the "leakage" of energy out of our relatively "closed" system (via heat and its ultimate consequence, infrared radiation).

Fortunately, the energy flux of radiation from the sun is substantially in excess of what is needed for "tapping off" to power the Earth's biomass "engine". Note how the energy flux from the sun is spreading out, spherically, and (from our perspective) at the speed of light, nearly all of it eventually (if not immediately) heading into the voids of deep intergalactic space. We are "blessed" by being in the path of this flux while it is still potent – but, fortunately, not too fierce. Earth, aided by its biomass, captures some of this flux and stores it. This delays its onward path and eventually "degrades" it to a longer wavelength (lower frequency) before its onward radiation path resumes (now it is dominantly infrared radiation because it comes from a lower temperature source than the sun); ultimately, however, the energy still leaks away as unstoppable spheres of radiation, on its way out into the void, but all at a longer wavelength (a slingshot effect – where local order has been increased at the expense of the wider system). The net energy transfer ("in" minus "out") is effectively zero – or we would, by now, be as hot as Venus. The sun's energy has simply been held up in its onward path, and this (probably) enhances our parochial appreciation of the existence and "expansion" of time. The distortion of space, into more than one dimension (the "unwound" energy of an electromagnetic wave "stretched out" through a vacuum is one dimensional), gives substance and feeling to our sense of the "passage of time". If we could travel astride the photonic radiation that went uninterrupted from the sun into deep space, we would be travelling at light speed. From the light "particle's" perspective, we would reach a very distant, low-energy-density-destination (its point of decoherence from a wave function when it interacts with matter) almost as soon as we had started out from the sun. 
At the speed of light, its flow of time evaporates to Planckian insignificance (there is no trade-off of distance into time, unlike at an event horizon where virtually all distance is traded into time; since time is essentially measured by "mechanical" vibration of one sort or another, this takes on extra significance), and it would also interpret the distance "travelled" as small. Static observers waiting at the destination would, however, still interpret the situation as a stream of photons taking N light years to reach them; but time flow, from the radiation's perspective (still a spread out waveform until it decoheres), is, according to relativity theory, effectively non-existent. From their own perspective, photons use up no time in crossing the universe.
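The special-relativistic time-dilation formula, tau = t * sqrt(1 - v^2/c^2), illustrates the claim that a traveller's clock barely moves near light speed. The sketch assumes a hypothetical trip of 1000 light years, standing in for the text's N light years, at a few sub-light speeds. The formula has no valid rest frame at exactly v = c, so the photon case appears only as the limit.

```python
import math

def proper_time_years(distance_ly: float, beta: float) -> float:
    """Years elapsed on the traveller's own clock for a trip of distance_ly light years.

    beta is the speed as a fraction of c and must satisfy 0 < beta < 1; the proper
    time tends to zero as beta approaches 1, which is the limit the text describes.
    """
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between 0 and 1")
    coordinate_time = distance_ly / beta  # years, as timed by static observers
    return coordinate_time * math.sqrt(1.0 - beta**2)

DISTANCE_LY = 1000.0  # hypothetical distance, standing in for the text's N light years
for beta in (0.5, 0.9, 0.99, 0.9999):
    print(f"v = {beta}c: observers wait {DISTANCE_LY / beta:.1f} yr; "
          f"traveller's clock shows {proper_time_years(DISTANCE_LY, beta):.2f} yr")
```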

Inefficiency is the unavoidable blight of heat engines, and it is manifest in the inability to tap off all of the potential energy tied up in an energy source. The net energy invested in "structuring processes" is inevitably accompanied by a trash yard of accelerated energy dispersion that is a cost of this inefficiency (ultimately, and via intermediate steps, particularly heat generation, part of this inefficiency is radiated out into the deep yonder as infrared radiation; we will never regain all of the energy that was originally gathered). Although, spatio-temporally, focal order is increased, the net outcome in the wide and future universe is a net acceleration towards entropic saturation and eventually – and in consequence – the gradual decay of structured systems to bland sameness, even bland nothingness, particularly when the radiation reaches deep space. You can appreciate this by considering the entrapment of radiant energy that emanates from the sun. Radiation that leaves the sun and spreads its influence into deep space, without being trapped by the earth, can decohere from a wave function at any point (in its travel it behaves like an extended wave-function, spread out from its origin to its eventual destination; on interaction with a "target" – mostly electrons – it is committed to act like a local particle of energy). The further away from the sun that its rays "travel" (literally radiate out), the faster that local space is expanding (effectively, moving away from them due to the Hubble expansion of the universe). The lower their frequencies become (they are red-shifted), the less energy they can transfer. At some very distant point, there should be insufficient energy for a "photon" energy packet to decohere and so – effectively – it never "left" its origin in the first place; it fails to reach the quantum threshold that is needed for energy transfer. 
For the "photon" this journey is timeless because it travels at the speed of light. Now, consider a "photon" that is entrapped by the Earth. This incident energy is eventually sent on its way when the Earth reradiates it, but then it is at a much lower frequency (infrared). Effectively, because its journey off into outer space has been delayed, the radiation energy is red shifted early and – from our perspective – arrives some time after the uninterrupted photons that travel, without diversion, from the sun. However, since the clocks of the uninterrupted photon-wave-functions are effectively "stopped" (from our perspective), the arrival of this delayed radiation from the Earth – provided that the destination is not too far away to prevent Earth's infrared radiation from decohering – is barely delayed from the wave-function's perspective; it has just red shifted more than usual, and its (radiation) sphere of influence should be smaller too. So here is a slingshot-like effect.
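Two standard textbook formulas put numbers on the shift from visible sunlight to Earth's infrared, and on the later loss of photon energy through red-shifting. Wien's displacement law gives the wavelength at which a black body radiates most strongly. E = hc/wavelength gives the energy of one photon, and a redshift z stretches the wavelength by a factor (1 + z). This is a minimal sketch. It assumes ideal black bodies, the illustrative temperatures in the comments, and arbitrary redshift values. The peak wavelength is only a characteristic value of a broad spectrum. The "decoherence threshold" speculated about in the text is not modelled.

```python
H = 6.62607015e-34       # Planck constant, J*s (exact in SI since 2019)
C = 299_792_458.0        # speed of light in vacuum, m/s (exact)
EV = 1.602176634e-19     # joules per electronvolt (exact)
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K

def peak_wavelength_m(temperature_k: float) -> float:
    """Wavelength at which an ideal black body at this temperature radiates most strongly."""
    return WIEN_B / temperature_k

def photon_energy_ev(wavelength_m: float, redshift_z: float = 0.0) -> float:
    """Energy of one photon, E = hc / wavelength, after stretching by a factor (1 + z)."""
    return H * C / (wavelength_m * (1.0 + redshift_z)) / EV

# Illustrative temperatures: the Sun's effective surface temperature and a typical
# mean surface temperature for the Earth (both treated as ideal black bodies).
for name, temp_k in [("Sun", 5772.0), ("Earth", 288.0)]:
    wl = peak_wavelength_m(temp_k)
    energies = [photon_energy_ev(wl, z) for z in (0.0, 1.0, 9.0)]
    print(f"{name}: peak {wl * 1e6:.2f} um; photon energy at z = 0, 1, 9:",
          ", ".join(f"{e:.4f} eV" for e in energies))
```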

Bland large scale sameness is featureless and uniform and not necessarily unpleasant to us humans. Think of the uniformity of the sand in an infant's sand table (or on a pristine beach). This sand is, macroscopically, of a uniform sameness even though, under a microscope, each grain may look different. We find this fine sand pleasant and appealing when it is new, but within a very short time this pristine (and perhaps we could say attractive) sand finds its way off the table, onto the patio, into the house, under our feet and into our shoes. It is starting to disperse within and equilibrate with the broader environment. It accumulates bits of dirty soil, muddy water and lots of "out of place" bits and bugs; indeed, it becomes increasingly messy and unpleasant. Its original, intended function is spoilt. There is no way out but to replace the sand – even the table. Bland pristine sameness does not offend us humans as much as the early loss of informational and functional structure (think of buildings recently devastated in an earthquake – ultimately these could all be weathered down to a pleasant sandy beach). What we humans cherish most is well structured order with lots of informational or functional content (note the well described mathematical parallels between heat entropy and informational entropy). What we dislike most is the bit midway between low and saturated entropy where functional structure is "falling apart". This emphasises a principle of two extremes. At one extreme we have information poor, homogenised, macro- and micro- bland sameness like deep intergalactic space. At the other extreme, we have information rich, formed, macro- and micro- organisation. To extrapolate the latter to an absurdity, imagine a working 400 km by 400 km computer chip in which every individual transistor faultlessly performs a critical function. Energetic fuels (like deuterium and tritium) might be closer to the second extreme than the first.

Entropy and disorder

I have been watching various videos about "the arrow of time" on the internet and was (negatively) impressed at how often entropy is equated, synonymously, with disorder. There is no doubt – whatsoever – that entropy has an impact on order, but the two are not the same thing, so it is worth getting this clear. Entropy – from the "pixels on a screen" analogy – means that the distribution of the quantal components that make up the system is constantly changing. This means that there is a tendency for the system to become homogeneous. Macroscopic patterns tend to disperse to become progressively microscopic patterns, and these patterns are – statistically – a far more probable outcome. We humans are very aware of order. Our bodies constitute a system that rips energy from our environment to maintain tissue order and can do so, when things go well, for the best part of 100 years. Our daily life depends upon many systems of order which we ourselves construct – once again by ripping energy from our environment. Ordered systems are, by definition, in a very improbable state and can only remain so by ripping energy gradients from our environment. The total entropy of the open system increases because this ripping of energy from the environment increases entropy disproportionately in peripheral areas as it achieves increased order within the core.
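Counting arrangements shows how much more probable the dispersed state is. The sketch below assumes N molecules that are each, independently, equally likely to be in the left or right half of a box. This is a common textbook simplification, and N = 100 is chosen for illustration. The sketch uses Boltzmann's relation S = k_B ln W, where W is the number of microscopic arrangements consistent with a macroscopic pattern.

```python
import math

def macrostate_probability(n_molecules: int, n_left: int) -> float:
    """Chance of finding exactly n_left of n_molecules in the left half of the box.

    Assumes every molecule is independently equally likely to be in either half.
    """
    return math.comb(n_molecules, n_left) / 2**n_molecules

def entropy_over_k(n_molecules: int, n_left: int) -> float:
    """Boltzmann entropy in units of k_B: S / k_B = ln W, with W = C(N, n_left)."""
    return math.log(math.comb(n_molecules, n_left))

N = 100  # illustrative; a real box holds something like 10**23 molecules
for label, k in [("all in the left half", N), ("evenly spread", N // 2)]:
    print(f"{label}: probability {macrostate_probability(N, k):.3e}, S/k_B = {entropy_over_k(N, k):.1f}")
```

Even with only 100 molecules, the even split is about 10^29 times more likely than finding them all in one half. For a real box, the gap is far larger.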

And now for some wild and furious speculation: and an advance apology for treading where I have no right to wander (wonder?)

Do not take any of this next section seriously. I have no academic right to be delving here. I have only trifling "expertise" that borders on the non-existent. However, in trying to understand entropy and its relationship to morphostasis, it led me to these ideas. I like this possible scenario even though it could quite probably all be utter balderdash. But, unless others read it, they will not be tempted into refining or trashing it. So, I am going to risk launching into wild and furious speculation, the goal of which is to advocate the idea that matter emerges, accretes and then further evolves from quantum fluctuations – akin to the evolution of complex animals from simple chemical beginnings (but not inevitably or exclusively in what we would interpret as the 2010 to 2020 "time direction"). It seems to me eminently probable that our universe is generated out of a spontaneous process that enables the creation (and, more importantly, the "persistence") of equal amounts of "positive" and "negative energy" (whatever that may mean – this emerges, mathematically, out of Dirac's equation and "the sea of negative energy" – particularly the point that E = mc² is the positive root of E² = m²c⁴, the rest-frame form of E² = (pc)² + (mc²)², and the negative solution, E = −mc², cannot be ignored). This certainly seems to happen in quantum foam and is manifest and measurable in the Casimir effect. Feynman showed that positrons can be represented (in Feynman diagrams) as electrons that act as if they are moving backwards in time (now-to-year-1900-direction). But what does this "backward in time" stuff mean? That is difficult to grasp when all our experience dictates that time goes just one way. However, we can get some anchor points. A photon (a one dimensional energy-transferring entity – a travelling wave – spread from its "origin" to its "arrival" as a wave-function "until" it interacts with matter) is perceived, by us, to be "travelling" at the speed of light (SoL) when in a vacuum. 
So, for a (one dimensional – 1D) photon in a vacuum, one metre is the same as 3.3 × 10⁻⁹ seconds to one decimal place (3.3 nanoseconds). That is, at relativistic "speed" (for it seems that nothing can go faster) and in 1D (vs 3D or more), a time interval is synonymous with the particular distance "travelled" by light in a vacuum and for an observed photon; it is, effectively, just a distance. So for us, as observers of the photon, a time interval can be measured as a distance or a distance measured as a time interval – provided we know the conversion factor (3.3 × 10⁻⁹ seconds per metre, which is simply 1/c). Once energy "dives down" into extra dimensions (as it becomes wound up as part of matter), time intervals lose this one to one relationship with the distance travelled by a wave; the "photon" is effectively an energy "transferring" packet carried on a wave. The increasing influence of the passage of time seems to emerge when the standing waves that constitute matter cover less distance than an equivalent 1-dimensional photon. It is as if 1-dimensional energy is "concentrated" (wound up/condensed) into extra dimensions and this energy has to travel "farther" than an equivalent 1-D photon; the rate-of-passage-of-time then becomes a function of the ratio of wound to unwound distances (wound into extra "dimensions" or volumes and particularly into forms of stable matter; ultimately, the passage of time – relative to the rest of the visible universe – slows to a virtual standstill at an event horizon). This "winding up" into extra dimensions can be appreciated, in part, by remembering that an electron "orbiting" a nucleus moves at an appreciable fraction of the SoL (roughly 1/137 of it in hydrogen, and more than half of it for the innermost electrons of heavy atoms such as gold); and if we add in electron spin, then there is even more winding and an even greater approach to the SoL. 
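The metre-to-seconds conversion factor is just 1/c. Electron speeds can be estimated from the simple Bohr model, in which an innermost electron moves at roughly Z * alpha * c, where alpha is the fine-structure constant (about 1/137). This is a back-of-envelope sketch. The Bohr estimate is non-relativistic and becomes unreliable as Z * alpha approaches 1, and the choice of elements is illustrative.

```python
C = 299_792_458.0                 # speed of light in vacuum, m/s (exact by definition)
FINE_STRUCTURE = 7.2973525693e-3  # alpha, roughly 1/137

seconds_per_metre = 1.0 / C
print(f"light crosses 1 m in {seconds_per_metre:.4e} s, i.e. about {seconds_per_metre * 1e9:.1f} ns")

def bohr_speed_fraction(z: int) -> float:
    """Bohr-model speed of an innermost (1s) electron as a fraction of c: v/c = Z * alpha.

    Non-relativistic estimate; increasingly inaccurate as Z * alpha nears 1.
    """
    return z * FINE_STRUCTURE

for element, z in [("hydrogen", 1), ("iron", 26), ("gold", 79)]:
    print(f"{element} (Z = {z}): roughly {bohr_speed_fraction(z):.1%} of the speed of light")
```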
If we regard time as a process that is one property of unsaturated entropic states that "want/need" to unwind into 1D and "return" to the mean, then does this imply that positrons may lead to conditions that favour a "macro-structured" to "dispersed" flow in the opposite direction to our common perception of the arrow of entropy? (As distinct from the arrow of time: this distinction becomes important if we view time as a ratio of wound up to unwound dimensionality, as this, on its own, should not specify a "direction".) So, if we could rapidly write Juliet's balcony speech in a bunch of structured positrons, would we expect to see some manifestation of the text being there before we wrote it, and of the whole speech appearing to be erased as "pen" touches "paper" and finally disappearing completely as the pen is raised? I doubt that this is at all likely. Nevertheless, a reversion to an earlier point in time does seem to be able to "explain" some aspects of quantum jumps (the spontaneous creation of virtual photons and virtual electron/positron pairs seems to "allow" such jumps). I have read of no evidence that suggests there is a reversal in the direction of entropy-flow in an antimatter world, or that sheds light on this possibility. Indeed, in an antimatter world, positrons should repel each other, just like electrons, and lead on to entropic dispersal induced by repelling positron shells. So, if we could look down on an antimatter world without interacting with it, other than through emitted photons, time ought to look much the same to us and appear to be running the same way as our time. Perhaps only when interacting with matter will it appear to induce a time "reversal".

So, if the universe is conjured up out of nothing, is there an "ultimate sink" where the "debts" of positive and negative energies are reunited? This could simply represent a "place" and/or a "state" where very low entropic conditions are dispatched to be "repatriated" and to "balance the books". This may be, more or less, what saturated entropy is all about – an improbable redistribution about the "mean" followed by a "return" to it. It seems to me that this mean is fairly well defined. It is characterised by bland nothingness: absolute zilch, and this could well be a virtual entity (approached but never actually reached; this being a fundamental reflection of quantum jitteriness). But, just either side of this mean of absolute nothingness and still very "close" to it is the Planck scale jittery "universe" of quantum foam – and we know that a "balancing of the books" certainly happens there, where virtual particles are transiently born and then annihilated. The universe may have been generated out of bland nothingness in the first place – in quantum foam fashion – with the occasional rare chance transition into persistent matter that then continues to emerge. The whole entropy thing might simply be a reflection of the ultimate need for a "time" and/or "place" and/or "state" and/or "mechanism" for "debt payback". Or, from an alternative perspective, it may be a diffuse process/"place"/state where net positive and negative energies "go" to be re-balanced to nothingness – acting, perhaps, rather like a fulcrum. And, lastly, are all processes in our universe moving, willy nilly, towards saturated entropy in what we perceive to be the now-to-year-2100-time-direction (vs the now-to-year-1900 direction)? The maths that models physics suggests that there is no fundamental reason why time cannot run in the reverse direction (we just don't gather much evidence that this happens in our parish within the universe). 
But, at the quantum level – that is, at Planckian time and distances – we can see evidence of such time reversal apparently happening. "Gravity" (whatever – fundamentally – that is) gathers neutrons and protons closer together with forward time. Looking at a film of gravitational events played backwards, it would look very much like the progressive dispersion of molecules in a box that started out as an intense focus of molecules in one small section of the box (initial unsaturated entropy in the future and final saturated entropy in the past – see the diagram below). Don't forget that all our day-to-day interaction with matter – even up to very many thousands of degrees centigrade – occurs through the interaction of electron shells (or, in an antimatter world, it would be positron shells). Even in most hot plasmas, it is mainly the outer electrons that are stripped from the atom (only at extreme temperatures, such as those in stellar cores, are atoms stripped bare). These "orbiting" electrons lead to repulsions (like "billiard ball" impacts) when the shells approach each other too closely ("at a distance" pairing of electrons with protons negates this repulsion as atoms move apart) and attraction (chemical bonds – where electron orbits become shared). Under "normal" parochial conditions, neutrons and protons (made up of quarks, gluons etc) are "shielded" from an annihilating (or unleashing – see below) interaction with the negative charge of electrons; they lie centrally isolated within these electron shells which they are unable to "touch" and, to me, seem only able to influence our "everyday" entropic-arrow-of-time in relativistic collisions and nuclear reactions. Perhaps this forward and backward in time (forward/backward entropy) "stuff" is simply a product of the various consequences of "stable" associations of "attractive" and "repulsive" "forces". 
This could point to a clue about the "positive" and "negative" deviations around the mean: it could be as simple as positive and negative electric charges emerging from electromagnetic waves and then building into particles. A good analogy is given by six sided dice marked with +1, +2 and +3 on three faces, each matched by the same number with the opposite sign (−1, −2 and −3) on the opposite face. For every dice toss, the top face may show a +3, but it will be accompanied by a −3 on the bottom face. So, electromagnetic waves may arise from nothing as "matched pairs" of equal magnitude but opposite polarisation which could (and will when reunited) truly "annihilate" each other (a nodal cancellation; vibratory nodes are the points of minimum intensity – even zero – and antinodes are the points of maximum intensity). Bear in mind that the production of electron/positron pairs from energetic photons (usually interacting with an atomic nucleus) is a commonly observed process. Similarly, an electromagnetic wave could be viewed as a negative charge/positive charge oscillation about the mean. The simple reason that time "always" goes one way in our world may be that we occupy an extremely improbable state and the vast majority of spontaneous fluctuations will be towards more likely states which, to our immediate senses, are dominated by electron shell repulsion. We already know that biological evolution can select for increasing complexity and that this runs counter to the "entropy-gradient-current" that is imposed by a "spontaneous desire" to return to the mean. That suggests that this low entropy "starting point" of the universe may have evolved from emerging/emergent interactions through multitudinous gradual "steps" that are selected for – but not necessarily always in what we would consider to be the 1900 to 2000 time direction. (We are, I suggest, bamboozled into the need to regard the yesterday, today, tomorrow sequence as a rigid inevitability. But, Einstein has already introduced us to the frailty of such a rigid view of time.) 
The distribution of entropy about the mean would be high-positive-negentropy to high entropy (the mean) on one "side" and high-negative-negentropy to high entropy (the mean) on the other. And the two should always remain perfectly balanced.
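The dice analogy is easy to simulate. The sketch below assumes a fair, hypothetical die with faces +1, +2 and +3, each opposite its −1, −2 or −3 counterpart, as described above. The seed and the number of throws are arbitrary. The sum of the top faces wanders from run to run, but adding the bottom faces always restores an exact zero.

```python
import random

FACES = (1, 2, 3, -1, -2, -3)  # hypothetical die: each +n face sits opposite its -n face

def throw(rng: random.Random) -> tuple[int, int]:
    """Return (top, bottom) for one throw; the bottom face is always the negated top."""
    top = rng.choice(FACES)
    return top, -top

rng = random.Random(2024)  # fixed seed so the run can be repeated
throws = [throw(rng) for _ in range(20)]
top_sum = sum(top for top, _ in throws)
bottom_sum = sum(bottom for _, bottom in throws)

print("sum of the top faces:   ", top_sum)                # varies with the seed
print("sum of the bottom faces:", bottom_sum)             # always the mirror image
print("tops plus bottoms:      ", top_sum + bottom_sum)   # always exactly 0
print("chance of twenty +3s in a row:", (1 / 6) ** 20)    # the 'improbable run'
```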

All of this leads to an interesting perspective on energy – which is the capacity of a system to do (or be tapped of) work. A purely statistical interpretation of energy may have much merit. In this view, energy is just an improbable (concentrated) distribution that "wants" to return to the mean. In classical physics, the conservation of energy seems to be absolute. In quantum physics, so long as the sample population is small, wild fluctuations in spontaneous energy distributions can and do occur (but only on "one face" of the generation process – see above). Energetic conditions (high PDs) simply represent improbable macro-distributions of the "building blocks" that make up the matter structure of our universe (ultimately it would be "strings" if this theoretical model eventually proves to be justified – and strings could represent energy-payback-wave-function-"filaments" – the filaments on which the wave "sits"). These quantal entities appear to demonstrate an "eagerness" to get back to bland sameness – really bland nothingness. And, with so many fundamental particles (let alone "strings") in the universe, this average bland sameness will represent a very narrow – "needle or razor sharp" – mean about which the spontaneous generation of matter, galaxies, stars, planets and humans would be unimaginably improbable. An explanation for this extreme improbability can be borrowed from Darwin. They could emerge, in a gradual step by step process of evolution (an alternative metaphor is "emergence"), rather like the gradual step by step evolution of simple, self catalysing chemical reactions through to mammals. The arrow of time that we associate with our encounter with matter is "dominated" by the immediate "contact" with repelling electron shells and with the high level of improbability that this evolved system has "reached". 
We may be missing underlying evidence that certain elements can also emerge through effects mediated by entropy flow in another (not necessarily opposite) direction. Remember the average density of our universe mentioned earlier. The statistical, saturated-entropy-mean almost certainly lies closer to the average conditions of deep intergalactic space than to the average conditions of the parish occupied by us here on Earth. I use "average" because quantum foam is everywhere, even at an event horizon, where virtual particles are believed to be "generated" and polarised to "either side" of it – Hawking radiation. This resonates with the dice analogy where the two opposing sides of a dice have the same magnitude but an opposite sign (they always add up to zero) but this frees them up to be able to have runs of highly improbable values on the "face" up sides of the dice and, of course, also on the negative "mirroring", on the bottom sides of the dice; e.g., think of a run of dice where all turn up as +3s over 20 throws; the face up sides of the dice (and vice versa) don't all have to add up to zero, although probability ensures that they usually do; but the summing together of the faces and the reverse sides of the dice must return an absolute and invariable zero – for this is the way the metaphorical "dice" have been constructed. Such radiation probably stabilises virtual pairs into a "prolonged" persistence of stable matter and for much "longer" (high wound to unwound proportions) than is the norm in the quantum-foam that occurs in deep-intergalactic-space. This might – without deeper understanding – appear to have "violated" our rigid belief that we cannot get "something for nothing". "Empty" space has precious few atoms and it is "populated" mostly by the cosmic background radiation. 
This is a population made up largely of low energy electromagnetic wave-functions (from our perspective, waves "charging around" at the speed of light if Einstein's conclusion was correct). Every photon will see its "adjacent" photons as point-like objects whose passages of time (internal clocks) are stopped or non-existent. These might, in some way, create a net interference-interaction that leads to destructive interference and to a virtual annihilation into bland nothingness. Note that two – otherwise identical – 180 degree out of phase waves can interfere so that they leave no local trace (just as in the dark fringes of an interference pattern – a true "annihilation"); and there seems no clear reason why they could not spontaneously "generate" waves in a reversal of this process; and, at Planck distances, the "quantum uncertainty principle" could be the thing that "encourages" this to happen. Note the often used idea that matter and antimatter "annihilate". This seems to me to be a misnomer (annihilate is derived from ad nihil – literally "to nothing: absolutely nothing"). However, matter and antimatter interact in such a way that all the wound up energy that is contained in their mass (due to their dive into multidimensionality and a concentration of improbabilities) is unleashed to escape/unravel back towards the mean as one dimensional electromagnetic radiation. Nearly all of this will head off into deep intergalactic space "back" to the statistical mean. It is just an efficient conversion of (multidimensional) mass energy into (one dimensional) electromagnetic energy – not an annihilation of energy. With waves, however, nodes represent a virtual focal annihilation of energy and, if all the low level cosmic background radiation is "projected" back to a virtual singularity (a zero dimensional entity), this might just represent our ultimate statistical mean of a node of "nihil". 
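A numerical check confirms that two otherwise identical waves 180 degrees out of phase cancel. The sketch samples two sine waves of equal amplitude and wavelength, using arbitrary illustrative values, and adds them point by point. It shows only that the local displacement cancels. It does not model where the energy of real interfering waves ends up.

```python
import math

def wave(amplitude: float, wavelength: float, phase: float, x: float) -> float:
    """Displacement of a simple sinusoidal wave at position x."""
    return amplitude * math.sin(2.0 * math.pi * x / wavelength + phase)

xs = [i * 0.05 for i in range(200)]                # sample points over ten wavelengths
first = [wave(1.0, 1.0, 0.0, x) for x in xs]
second = [wave(1.0, 1.0, math.pi, x) for x in xs]  # identical wave, 180 degrees out of phase
combined = [a + b for a, b in zip(first, second)]

print("largest displacement of one wave:", max(abs(v) for v in first))
print("largest displacement of the sum: ", max(abs(v) for v in combined))  # zero apart from rounding
```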
The peculiar properties of relativity allow for such a contraction in time and space "when travelling at the SoL" to transform what we regard to be objects too far apart to interact, to become effectively a Planckian size and Planckian time interval apart. An implication of this is that the energy we humans are interested in – the stuff that drives steam engines and nuclear reactors – can surprisingly (to us) emerge out of absolutely nothing (but in distance separated matched positive-energy/negative-energy pairs; energy being a statistically improbable distribution). The ultimate "payback" may be that wave-functions are generated out of "entangled" positive-energy and negative-energy pairs or even time/distance loops whose net product cannot be anything other than zero/nihil. To reinforce this illustration, I will repeat this dice analogy; we need to assume that we are using six sided dice and these have +1 and −1 on opposite faces of the dice cube and the same for +2, −2 and +3, −3. When we just look at the tops of these dice, very unusual (and individually highly improbable) distributions can and do occur. However, if we remember to include the reverse side of the dice, we have an entangled mirror image (+1 always paired with −1 and the same for 2 and 3: this represents two wave-functions, 180° out of phase with each other) and the "universal books" are always perfectly balanced at a net of zero even though the parochial books can seem – on rare (spontaneous) occasions – to be highly imbalanced. This "entanglement" of the sides is interesting because the implication of this metaphor is that time/entropy-flow might run one way for the "top" of the "dice" and the other way for the bottom of the "dice".

Could an extension of the principles, well recognised in biology, of evolution, emergence, morphostasis and general systems theory (see this site and publications), lead to a similar emergence and progressive accretion and concentration of the things that succeed and persist in our universe (and also see this article)? The metaphor that this conjures up is of the evolution/emergence of stable associations of leptons and baryons as matter that condenses in an increasingly tangled up, multidimensional form out of a sea of 1-dimensional electromagnetic radiation. This condensation requires the close juxtaposition (but "physical" separation) of positive- and negative-energy (associated somehow with electric charges?) which would otherwise "annihilate". However, this use of the word "annihilation" is arguably inappropriate here. Instead, it represents the unleashing of the wound up electromagnetic phenomena that make up the standing waves of matter so that they can escape back into 1-D electromagnetic radiation and, thus, head off equally to all locations in space (the majority of it, therefore, heading off into deep intergalactic space) back to "nihil". And in a matter world the negative charge is on the "outside". Don't miss the significance of the frequent dominance of chirality in the emergence of life and the metaphor that this projects at matter and antimatter. The spontaneous emergence of our galaxy, our Sun, Earth and its life forms from nothingness may be far, far, far, far (etc) more improbable than a Jumbo Jet spontaneously assembling itself in one microsecond from a mixed pot of its component atoms and their particles. This jumbo-jet analogy has been regularly paraded to "discredit" the idea that life can emerge without the aid of a creator. 
So, the metaphor of Darwinian biological evolution that leads to the gradual emergence and accretion of complexity could explain how our visible universe might also be the "evolved" (or emergent) outcome of a culture "pot" that allows the emergence and persistence of self ordering systems from the "mega-zillions" of suck-it-and-see possible but individually improbable quantal permutations. These multitudinous permutations seem to be an inherent property of quantum mechanics and they have led to the multiverse assumption. This could also help to explain why our universe seems to be so finely tuned – the Goldilocks enigma. This would be particularly relevant if "tangible matter" is made up of positive(energy)/negative(energy) "building blocks" in stable, increasingly "long lived", associations that, being "wound up" into extra dimensions, actually "create" the impression that there is an immutable direction to the passage of time. (Using the point made earlier, it could be made up of stable associations of positively and negatively charged particles that cannot annihilate each other because there is a threshold barrier – for example, protons cannot escape the nucleus and electrons cannot contract their 2-D spherical-surface-distribution below a basal quantum energy level.) And time quanta might be the inevitable consequence of quantum jitteriness (this jitteriness, at base, is an inability to know exactly where a quantum element is and, at the same time, exactly how fast it is travelling. Both properties could be simply the result of a jitteriness in that element's position "in space" – giving rise to apparent "distance jumps" or "quantum jumps"). I have emphasised, earlier, how systems are constantly "changing" by small increments. It is not a relentlessly progressive change in one restricted direction. Each change is quantal and away from the most "recent" configuration. It could be to a more probable or more improbable distribution. 
If the current configuration is (spontaneously – i.e., without emergent feedback and accretion) vanishingly improbable, then the next change will almost certainly be towards a more probable configuration. Should we move very close to the mean, then we would be much harder pressed to discern which changes are towards "a more probable distribution". So time "forward" may be no more than "spontaneous probability greater". And time "backward" may be no more than "spontaneous probability lesser". At very small (fundamental particle) scales, it is fairly common that a "change" will occur to a more improbable conformation – although most changes will be in the "more probable" direction, leading to a "mass action" illusion that change is always in the "time forward" direction. However, to achieve a macroscopically improbable state, a whole train of "probability-less-than-50%" events will have to be strung together in sequence. So, time forwards is "macro-probability" higher. Time backwards is "macro-probability" lower. At the fundamental particle scale, "backward in time" events (probability less than 50%) are relatively common as isolated observations but rare as macroscopically co-ordinated observations (a whole train of under-50%-probability events must here be superimposed upon each other). Note how an "atom" made of an electron and a positron (positronium) can exist in a relatively stable state for brief periods (about 140 ns in its longer-lived, ortho-positronium form) before "annihilating" (really being "unleashed" into 1-D electromagnetic – photonic – radiation). Events that we interpret as moving forward in time or being a past event could be occupying a common physical province (particularly when we interpret the "ashes" of the Big Bang). Their temporal separation might be much more evident to us in this parish than it is real to the universe at large. 
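Numbers can be put on the "whole train of under-50%-probability events" if one assumes, for illustration only, that each step is independent and has the same probability p. The chance of n such steps in a row is then p^n. The values of p and n below are arbitrary.

```python
def chain_probability(step_probability: float, n_steps: int) -> float:
    """Chance that n independent steps, each with the same probability, all occur in sequence."""
    return step_probability ** n_steps

# Illustrative values only: one improbable step is unremarkable, a long train is not.
for p in (0.49, 0.4, 0.1):
    row = ", ".join(f"n={n}: {chain_probability(p, n):.2e}" for n in (1, 10, 100))
    print(f"p = {p}: {row}")
```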
Remember, the passage of time at an event horizon is virtually at a standstill – so our nearest black hole’s time is stuck – more or less from our perspective – at the time that it was first formed; and that could be very close, again from our perspective, to the “big bang”. If we could travel close to the speed of light, it could be a far shorter journey for us to reach this far back in time if we travelled, "forward in time", directly into this local black hole than if we devised a way of travelling "backwards in time" to the moment of gravitational collapse that first formed it. Riemannian geometry (the "stuff" that transformed special into general relativity) can be caricatured in the way two "individuals" can set off in geometrically opposite directions from the North pole and then eventually meet each other back at the same pole (having also had a prolonged interaction where their paths cross, at the South pole, before returning to the North pole). This metaphorical scenario is played out on the surface of a 3-D sphere – the Earth – but on the "surfaces" of a 10- or 11-D structure the scenario is much harder to conceptualise but no less real and probably analogous. Photons seem to head off back to nihil/nothing in a "straight" (1-D) line. Baryonic matter (roughly, the heavy stuff of atomic nuclei) heads off "back" to nihil/nothing in the "opposite" (multi-dimensional) "direction" in an entropic way that we interpret as gravitational attraction, but it could equally be interpreted as a "time reversed" repulsion (effectively, high entropy in the "past"). String theory suggests that there may be a manifold transition from an R-sized universe to a 1/R-sized universe that leaves the two "close" enough together to make that transition. Of course, this seems absolute heresy to us folk who are accustomed to thinking regularly in terms of Euclidean geometry. 
Matter may be, ultimately, a standing wave formed from entropy-flow-forward (electron-repulsion-driven) and entropy-flow-backward (gravity – a dispersive "force" if played backwards in time) electromagnetic waves. If we could miniaturise ourselves, penetrate the external electron shell, enter the atomic nucleus and then sit with a group of neutrons and protons, we might see entropy begin to flow the other way. We are already aware of a related trend, for the passage of time progressively slows deeper within increasingly large, heavy bodies and we believe it virtually "stops" at an event horizon. So, the passage of time is progressively delayed through a vacuum, hydrogen gas, iron filings, the centre of our Earth, the centre of our Sun, a neutron star and then an event horizon (strictly, it is the depth of the gravitational potential, rather than the density of the local material, that sets this rate, so the difference between hydrogen gas and iron filings on a laboratory bench is negligible). The passage of time in the atomic nucleus should, by this argument, be very different to that in its shell. So we are, at least, part of the way towards establishing that time and entropy-flow are different for leptons (electrons, positrons etc) than for baryons (protons, neutrons etc).
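The ranking of bodies above can be checked, at least at their surfaces, with the exterior Schwarzschild formula, in which a static clock at radius r outside a non-rotating mass M ticks at √(1 − 2GM/(rc²)) of the rate of a distant clock. This is a sketch under stated assumptions: the masses and radii are rounded textbook values, the neutron star (1.4 solar masses, 12 km radius) is a hypothetical typical example, and the centres of the Earth and Sun would need the interior solution, which this code does not attempt.

```python
import math

G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8       # speed of light, m/s
SOLAR_MASS = 1.989e30  # kg (rounded)


def clock_rate(mass_kg: float, radius_m: float) -> float:
    """Rate of a static clock at radius_m outside a non-rotating spherical mass,
    relative to a distant clock: sqrt(1 - r_s / r) (exterior Schwarzschild only)."""
    rs = 2 * G * mass_kg / C ** 2  # Schwarzschild radius
    if radius_m <= rs:
        raise ValueError("radius must lie outside the Schwarzschild radius")
    return math.sqrt(1 - rs / radius_m)


# Rounded masses (kg) and radii (m); the neutron star values are an assumed typical case.
bodies = {
    "Earth surface": (5.972e24, 6.371e6),
    "Sun surface": (SOLAR_MASS, 6.957e8),
    "Neutron star surface": (1.4 * SOLAR_MASS, 1.2e4),
}
for name, (m, r) in bodies.items():
    print(f"{name}: clock runs at {clock_rate(m, r):.10f} of the distant rate")

# Approaching the horizon of a hypothetical 10-solar-mass black hole.
m_bh = 10 * SOLAR_MASS
rs = 2 * G * m_bh / C ** 2
for factor in (2.0, 1.1, 1.001, 1.000001):
    print(f"r = {factor} r_s: clock rate = {clock_rate(m_bh, factor * rs):.6f}")
```

At the Earth's surface the slowing is below one part in a billion, at a neutron star's surface clocks run at roughly 80% of the distant rate, and the rate falls towards zero as the horizon is approached.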

So might it be possible to "draw out" ("condense out") entropy-forward/entropy-backward systems from this morass of nothingness close to the statistical mean? This would start as the generation of quantum foam and electron/positron pairs but then lead on to the growth/emergence of constructs that persist for longer – creating their own extended time loops (and increasing improbabilities) in the process. This would be faintly analogous to the way visual evoked potentials emerge and grow in a recording: we see these potentials gradually emerging, almost magically, out of a sea of background noise as each repeated response is added to the average. Such forward/backward entropy structures should lead to stable systems of matter. They are progressively folded up into extra dimensions, which then act analogously to the oxygen/diesel mixture alluded to earlier. This mixture is relatively stable and energy cannot "flow back to the mean" until some "ignition" mechanism is triggered (a threshold condition must be met – atomically, this means crossing the barriers that hold apart the "positive-energy"/"negative-energy" units that constitute atoms so that the two can at last interact and be unleashed back to 1-D from these multidimensional cages). Ultimately, matter could accrete and emerge to a concentration that is sufficient to "reach" the point where it can form its own event horizon. This might "coincide" with an acceleration in the matter creation process (even of generating that "event" that we consider to be the "Big Bang"). Payback will still be "required" but it has now turned into a "protracted loan" by generating the time delay that (parochially) prolongs it by diving into extra dimensions. 
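The "emergence out of noise" in evoked-potential recordings comes from averaging: every recording contains the same time-locked response plus independent noise, so the response survives averaging while the noise shrinks roughly as 1/√N for N recordings. A minimal sketch, assuming a hypothetical bell-shaped response of unit amplitude buried in Gaussian noise five times larger; `averaged_trace` and `residual_rms` are illustrative names.

```python
import math
import random


def averaged_trace(n_trials: int, n_samples: int = 200, noise_sd: float = 5.0,
                   seed: int = 0) -> tuple[list[float], list[float]]:
    """Average n_trials noisy recordings of one hypothetical, time-locked response.
    Returns (average, true_response)."""
    rng = random.Random(seed)
    # Hypothetical response: a smooth bump of peak amplitude 1 centred on sample 100.
    response = [math.exp(-((t - 100) / 15) ** 2) for t in range(n_samples)]
    totals = [0.0] * n_samples
    for _ in range(n_trials):
        for t in range(n_samples):
            # Each trial = the same response + fresh, independent Gaussian noise.
            totals[t] += response[t] + rng.gauss(0.0, noise_sd)
    return [x / n_trials for x in totals], response


def residual_rms(n_trials: int) -> float:
    """Root-mean-square difference between the averaged trace and the true response."""
    average, response = averaged_trace(n_trials)
    return math.sqrt(sum((a - r) ** 2 for a, r in zip(average, response)) / len(response))


for n in (1, 16, 256):
    print(f"{n:4d} trials: residual noise RMS = {residual_rms(n):.3f}")
```

With noise five times the size of the response, a single trial hides it completely; after 256 trials the residual noise has fallen by a factor of about 16 (√256), so the bump stands clear of the background.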
Also note that, if increasingly improbable "events" on the "left" of the mean are in some way associated with negative charges and increasingly improbable events to the "right" of the mean are in some way associated with positive charges, then this suggests a possible mechanism to lock up these improbabilities into "stable" (persistent) matter. The meaning of time at each evolutionary point would, almost certainly, not sit comfortably in the parochial and unidirectional straitjacket we currently devise for it.

All of this speculation section could well be utter balderdash: I have no credentials that give me a right to interfere. But, to me, it does offer a hint (requiring massive refinement from those in the know) as to how an emergent system might catalyse its own persistence and emergence from tiny beginnings (e.g., electron/positron pairs). It might also resolve a number of conundrums – not the least of which is how our universe was born in an "impossibly" improbable state (inflation just pushes this back a step and leads us into eternally budding bubble multiverses). It is important to remember that, at the mean, the passage of time in one direction might be pretty much meaningless to its dominant population. Massless electromagnetic waves are all moving – from our perspective – away from all matter and from each other at the speed of light (SoL): two objects moving relative to each other close to the SoL will each judge the other's mass to be greatly increased and the other's clock to be running slow; if one object can instantly decelerate into its opposite's inertial frame then it will consolidate that mass and clock status into the new inertial frame. We see this in observations of muons created high in our atmosphere: their "clocks", while travelling close to the SoL, are, by our inertial-frame yardstick, running much slower than ours (although, in their inertial frame, they would perceive our clocks as the ones that are running slow). Their average lifetime is set by their own clock rate (which, as perceived by us, is slow) – so, when entering then decelerating into our inertial frame, this perceived slow running nature is actually consolidated into our frame. The decay of muons is therefore much delayed, leading to a much deeper penetration towards sea level than non-relativistic (Newtonian) physics would predict. 
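The muon argument can be put into numbers with the Lorentz factor γ = 1/√(1 − v²/c²): in our frame a moving muon's mean lifetime is stretched from about 2.2 µs to γ times that, so its mean decay length becomes v·γ·τ. The sketch below assumes an illustrative speed of 0.998c and a production height of 15 km (both hypothetical round figures), compares the survival fractions with and without time dilation, and shows the decay length growing without limit as the speed approaches c.

```python
import math

C = 2.99792458e8     # speed of light, m/s
TAU_MUON = 2.197e-6  # mean muon lifetime in its own rest frame, s


def lorentz_factor(beta: float) -> float:
    """gamma = 1 / sqrt(1 - v^2 / c^2), with beta = v / c."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)


def mean_decay_length(beta: float, relativistic: bool = True) -> float:
    """Mean distance (m) a muon travels in our frame before decaying."""
    lifetime = TAU_MUON * (lorentz_factor(beta) if relativistic else 1.0)
    return beta * C * lifetime


def survival_fraction(distance_m: float, beta: float, relativistic: bool = True) -> float:
    """Fraction of muons still undecayed after travelling distance_m."""
    return math.exp(-distance_m / mean_decay_length(beta, relativistic))


BETA = 0.998     # illustrative speed, as a fraction of c (assumption)
HEIGHT = 15_000  # illustrative production altitude, m (assumption)
print("surviving to sea level, no time dilation:", survival_fraction(HEIGHT, BETA, False))
print("surviving to sea level, with time dilation:", survival_fraction(HEIGHT, BETA, True))

# The decay length grows without limit as the speed approaches c.
for b in (0.9, 0.99, 0.999, 0.9999):
    print(f"v = {b}c: gamma = {lorentz_factor(b):.1f}, mean decay length = "
          f"{mean_decay_length(b) / 1000:.1f} km")
```

Without time dilation only about one muon in ten billion would survive the trip under these assumptions; with it, roughly a quarter do.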
Should a particle travel even closer to the SoL, the distance it "travels" before decaying would increase dramatically (growing without limit as its speed approaches the SoL) because, relative to us, its "clock" is virtually stopped. [Interestingly, the energy packet of a photon can instantly decelerate and even, instantly, reflect the opposite way.] Just either side of the statistical mean, time intervals are so short as to be virtually non-existent – this is represented in the "quantum foam". And a similar distortion of the passage of time occurs at an event horizon – but here the passage of time relative to everything else in the universe falls to a virtual halt. It may only be within the spiral arms of galaxies that a dominant population arises that "feels" the segregating influence of the rush of time, as we "know" it, but only so long as it remains confined in its local parish. The microwave background radiation consists of sets of wave-functions that are spread out from their "origins" to their "arrivals" until – and if – they decohere (materialise; or, to emphasise the point, matter-ialise). All of the passage of time (forwards and back in equal – about-the-mean – amounts, even though we only appreciate the "forward") would, from this perspective, become a parochial perception due to the ratio of wound to unwound filaments "emerging" from the quantal uncertainties that somehow lead to stable entropy-flow-forward/entropy-flow-backward systems. So, "forward in time" effects may be somehow "associated" with negative charges and "backward in time" effects somehow "associated" with positive charges. This dive into extra dimensions is accompanied by a progressive trade-off of distance covered for an increase in the "passage of time" (effectively, "farther" to travel). 
(Also, 1-D quantum jumps should be much "larger" than 9-D quantum jumps and this might be reflected in the trade-off of distance into time as we dive into extra dimensions; in extremis, these 1-D jumps might be across the universe and it is tempting to equate these to the behaviour of photons.) Remember, the earlier conjecture is that forward and backward entropy systems could occupy (from our perspective) the "same" space but, when recorded in forward time, one acts as if it were under dispersive entropic pressure and the other under accretive entropic pressure: and vice versa when recorded in backward time. Our apparent arrow of time may be dominated by our interaction with repelling electron shells. Stable associations (both nuclear and chemical) arise when, "at-a-distance", net-charge-balancing leads to relatively stable systems.

Follow this link to go to "mega-conjecture".

This is totally wild and undisciplined speculation. But, be warned, it is in the realm of see-sawing conjecture; much of it still needs to settle in; and much is likely to be eventually ejected. None of it is written by a physicist. None of it should do anything other than tempt you to develop your own thoughts around this arena. This is even wilder speculation with further ideas on "A universe from nothing".

Concluding bits and bobs

This way of thinking about the "system" can go on and on but a deeper "dive" into more speculation would be inappropriate here – I have certainly gone way, way, way out of my depth already and far beyond my competence. But perhaps ...?

(Note: it is impossible to formulate any idea without a verb – which is a doing/temporal agent. So, it is important to bear in mind that the passage of time may be the conceptual millstone of our parish. For the wave-function world, everything, that-is-was-and-will-be, might just "be" and all temporal rules and vetoes may simply be the product of our particular parishes. This is why so many expressions are in inverted commas; they warn us to keep the metaphors loose – they may represent a useful analogy but don't tie them down too literally and too tightly.)

NB: I have just noticed a website that states "the universe has low entropy". Surely not!!! I have already noted that the vast majority of our universe consists of deep intergalactic space, which has about as high an entropy state as is conceivable without being totally "saturated". This highlights our parochial preoccupation with the "big bang" (the "initiator" of matter condensation) and our local environment, here on mother earth – basking in our sun's radiation.

And that last comment leads to a further observation. Low entropy systems (eg, all the molecules starting in the bottom-front-right-hand corner of a box) inexorably flow to high entropy systems as homogenisation occurs. However, that flow is rarely smooth and even; it is usually chaotic and this leads to relatively stable patterning. In this chaos we frequently see an uneven progression to homogenisation in which spontaneous ordering patterns (eg, eddies) occur (eg, look up Rayleigh-Bénard convection). Thus order is an inevitable accompaniment of low to high entropy flow (high focal energy to low dispersed energy). Ordering needs a potential difference of some sort and it can (and will) emerge simply due to random inequalities in the flow towards total homogenisation. There is a tendency to "slingshot" inequalities, where some elements are levered out to a higher potential at the expense of others that are hurried in to lower potentials. It is likely to be fractal in form. And this order will finally dissipate as the final gasp towards achieving total homogenisation is approached.

On mother earth, the potential for order comes from the focal (ie, uneven) proximity to a potent energy source (the sun). Mother earth simply interrupts the flow of photons that radiate out into deep intergalactic space. In doing so, we living organisms effectively use this local high-frequency (low-entropy) radiation, create order with it, then dump the radiation at a prematurely low frequency (infrared heat radiation). This gradient has lasted at least 4.5 bn years so far. So, unlike a steam reservoir (to drive an engine) that can soon be spent, this energy flow is seemingly limitless to us. Uninterrupted solar radiation would have had to travel a far greater distance to reach the same low frequency as that emitted – at night – by mother earth after it has "milked" the sun's "free" energy gradient.
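The Earth's "milking" of the solar gradient can be roughly quantified. In a steady state the Earth radiates away about as much energy as it absorbs, but at a much lower temperature. For blackbody radiation the entropy carried by energy E at temperature T is 4E/(3T), and the mean photon energy is about 2.70 kT, so both the entropy and the number of photons leaving exceed those arriving by roughly the ratio T_sun/T_earth. This sketch treats both beams as simple blackbodies at single temperatures (ignoring the geometric dilution of sunlight) and assumes approximate values of 5772 K for the Sun's surface and 255 K for the Earth's effective radiating temperature.

```python
K_B = 1.380649e-23  # Boltzmann constant, J/K
T_SUN = 5772.0      # Sun's effective surface temperature, K (approximate)
T_EARTH = 255.0     # Earth's effective radiating temperature, K (approximate)

# For blackbody radiation the mean photon energy is about 2.701 k_B T.
MEAN_PHOTON_ENERGY_FACTOR = 2.701


def photons_per_joule(temperature_k: float) -> float:
    """Approximate number of blackbody photons carrying one joule at this temperature."""
    return 1.0 / (MEAN_PHOTON_ENERGY_FACTOR * K_B * temperature_k)


def radiation_entropy(energy_j: float, temperature_k: float) -> float:
    """Entropy (J/K) of energy_j of blackbody radiation at temperature_k: S = 4E / (3T)."""
    return 4.0 * energy_j / (3.0 * temperature_k)


ENERGY = 1.0  # steady state: each joule absorbed from sunlight is re-radiated as infrared
photon_ratio = photons_per_joule(T_EARTH) / photons_per_joule(T_SUN)
entropy_ratio = radiation_entropy(ENERGY, T_EARTH) / radiation_entropy(ENERGY, T_SUN)
print(f"infrared photons out per solar photon in: {photon_ratio:.1f}")
print(f"entropy out / entropy in:                 {entropy_ratio:.1f}")
```

Both ratios come out at a little over 20: the same energy leaves as many more lower-frequency photons carrying far more entropy, which is the gap that, on this view, living systems exploit.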

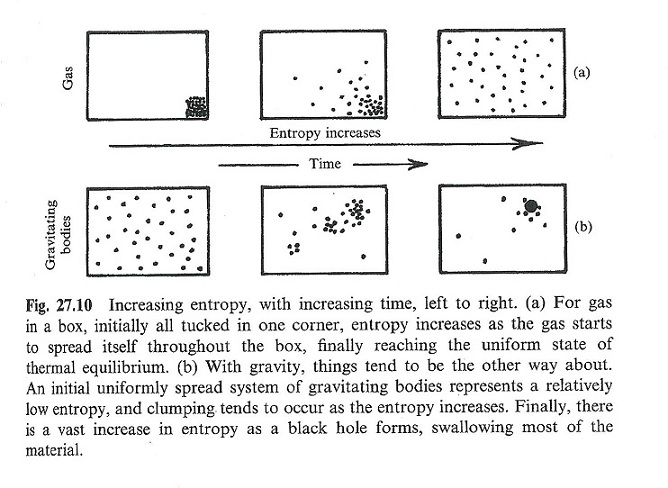

This diagram is in "The Road to Reality: A Complete Guide to the Laws of the Universe" by Roger Penrose, p. 707. Note that he does not suggest that the entropy is "backwards in time" for gravity.

Endnote: The reason for this contraction of the distribution around the mean (relative to the full range of possible totals, as N increases) can be understood by considering the number of different ways there are of arranging a group of molecules (or dice) so that they add up to a particular value (or number). The total face value of the dice will be:

[all +3s]+[all +2s]+[all +1s]+[all 0s]+[all −1s]+[all −2s]+[all −3s]
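The counting argument can be sketched directly. Assuming, as the bracketed terms above suggest, dice whose faces run from −3 to +3 (the die used elsewhere in the full piece may differ), the code below counts, by repeated convolution, how many arrangements of N dice give each total, and then measures what share of all arrangements falls within ±10% of the largest possible total around the mean of zero. The function names are hypothetical.

```python
from collections import Counter

FACES = range(-3, 4)  # assumed face values -3, -2, -1, 0, +1, +2, +3


def totals_distribution(n_dice: int) -> Counter:
    """Count the arrangements of n_dice dice that give each possible total face value."""
    dist = Counter({0: 1})  # zero dice: one arrangement, total 0
    for _ in range(n_dice):
        nxt = Counter()
        for total, ways in dist.items():
            for face in FACES:  # add one more die showing each face in turn
                nxt[total + face] += ways
        dist = nxt
    return dist


def fraction_near_mean(n_dice: int, band: float = 0.1) -> float:
    """Share of all arrangements whose total lies within +/- band * (largest possible total)."""
    dist = totals_distribution(n_dice)
    limit = band * 3 * n_dice  # the largest possible total is 3 * n_dice
    near = sum(ways for total, ways in dist.items() if abs(total) <= limit)
    return near / sum(dist.values())


for n in (1, 4, 16, 64, 256):
    print(f"N = {n:3d}: share within 10% of the range around the mean = "
          f"{fraction_near_mean(n):.3f}")
```

With one die only the single arrangement showing 0 lands in the band (1 of 7); by N = 256 almost all arrangements do, because there are overwhelmingly more ways of mixing high and low faces than of lining up extremes, even though the absolute spread still grows as √N.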